Platform paper social experiment analysis: Part 2#

This guide continues the behavioural data analysis from experiments social0.2, social0.3, and social0.4, introduced in Platform paper social experiment analysis: Part 1, where we outlined the experimental design and provided provided setup instructions for running the analysis.

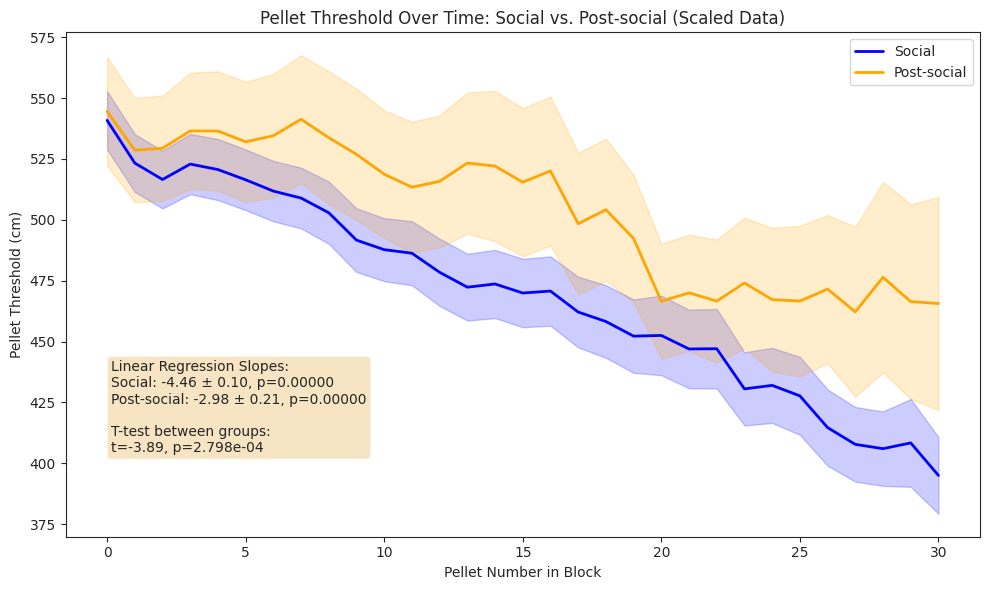

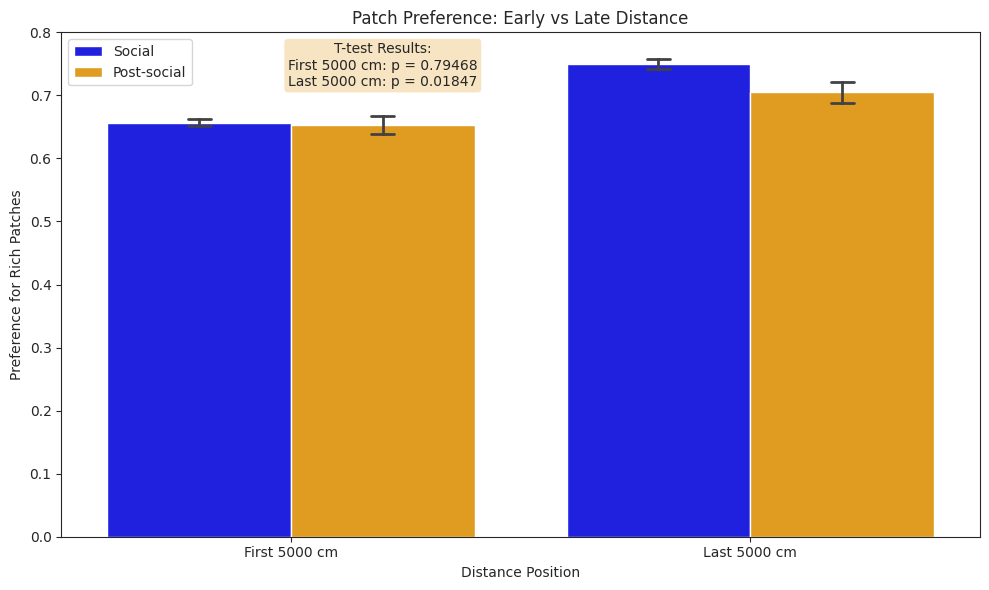

Here, we assume the environment is already active and focus on comparing solo and social behaviours—specifically exploration, sleep, foraging, and drinking—as well as solo and social learning, including changes in foraging efficiency over time and patch preference, quantified as the probability of being in the poor patch.

See also

DataJoint pipeline: Fetching data as DataFrames and DataJoint pipeline: Computing behavioural bouts for details on how we quantify exploration, sleep, foraging, and drinking bouts based on position data.

Import libraries and define variables and helper functions#

from pathlib import Path

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

import seaborn as sns

from scipy import stats

from scipy.ndimage import uniform_filter1d

from tqdm.auto import tqdm

The hidden cells below define helper functions, constants, and setup variables used throughout the notebook. They must be run, but are hidden for readability.

Show code cell source

Hide code cell source

# Plot settings

sns.set_style("ticks")

# Constants

cm2px = 5.2 # 1 cm = 5.2 px roughly in aeon arenas

light_off, light_on = 7, 20 # 7am to 8pm

patch_type_mean_map = {100: "l", 300: "m", 500: "h", 200: "l", 600: "m", 1000: "h"}

patch_type_rate_map = {

0.01: "l",

0.0033: "m",

0.002: "h",

0.005: "l",

0.00167: "m",

0.001: "h",

}

experiments = [

{

"name": "social0.2-aeon3",

"presocial_start": "2024-01-31 11:00:00",

"presocial_end": "2024-02-08 15:00:00",

"social_start": "2024-02-09 16:00:00",

"social_end": "2024-02-23 13:00:00",

"postsocial_start": "2024-02-25 17:00:00",

"postsocial_end": "2024-03-02 14:00:00",

},

{

"name": "social0.2-aeon4",

"presocial_start": "2024-01-31 11:00:00",

"presocial_end": "2024-02-08 15:00:00",

"social_start": "2024-02-09 17:00:00",

"social_end": "2024-02-23 12:00:00",

"postsocial_start": "2024-02-25 18:00:00",

"postsocial_end": "2024-03-02 13:00:00",

},

{

"name": "social0.3-aeon3",

"presocial_start": "2024-06-08 19:00:00",

"presocial_end": "2024-06-17 13:00:00",

"social_start": "2024-06-25 11:00:00",

"social_end": "2024-07-06 13:00:00",

"postsocial_start": "2024-07-07 16:00:00",

"postsocial_end": "2024-07-14 14:00:00",

},

{

"name": "social0.4-aeon3",

"presocial_start": "2024-08-16 17:00:00",

"presocial_end": "2024-08-24 10:00:00",

"social_start": "2024-08-28 11:00:00",

"social_end": "2024-09-09 13:00:00",

"postsocial_start": "2024-09-09 18:00:00",

"postsocial_end": "2024-09-22 16:00:00",

},

{

"name": "social0.4-aeon4",

"presocial_start": "2024-08-16 15:00:00",

"presocial_end": "2024-08-24 10:00:00",

"social_start": "2024-08-28 10:00:00",

"social_end": "2024-09-09 01:00:00",

"postsocial_start": "2024-09-09 15:00:00",

"postsocial_end": "2024-09-22 16:00:00",

},

]

periods = ["social", "postsocial"]

# Define the possible combos of social and light

combos = [

(True, True), # Social + Light

(True, False), # Social + Dark

(False, True), # Solo + Light

(False, False), # Solo + Dark

]

# Define colors based on light condition (light=blue, dark=orange)

colors = {

True: "#1f77b4", # Blue for light conditions

False: "#ff7f0e", # Orange for dark conditions

}

# Define hatch patterns based on social condition

hatches = {

True: "///", # Hatched pattern for social

False: None, # No pattern (solid) for solo

}

labels = ["Social-Light", "Social-Dark", "Solo-Light", "Solo-Dark"]

Show code cell source

Hide code cell source

def load_data_from_parquet(

experiment_name: str | None,

period: str | None,

data_type: str,

data_dir: Path,

set_time_index: bool = False,

) -> pd.DataFrame:

"""Loads saved data from parquet files.

Args:

experiment_name (str, optional): Filter by experiment name. If None, load all experiments.

period (str, optional): Filter by period (presocial, social, postsocial). If None, load all periods.

data_type (str): Type of data to load (position, patch, foraging, rfid, sleep, explore)

data_dir (Path): Directory containing parquet files.

set_time_index (bool, optional): If True, set 'time' column as DataFrame index.

Returns:

pd.DataFrame: Combined DataFrame of all matching parquet files.

"""

if not data_dir.exists():

print(f"Directory {data_dir} does not exist. No data files found.")

return pd.DataFrame()

# Create pattern based on filters

pattern = ""

if experiment_name:

pattern += f"{experiment_name}_"

else:

pattern += "*_"

if period:

pattern += f"{period}_"

else:

pattern += "*_"

pattern += f"{data_type}.parquet"

# Find matching files

matching_files = list(data_dir.glob(pattern))

if not matching_files:

print(f"No matching data files found with pattern: {pattern}")

return pd.DataFrame()

print(f"Found {len(matching_files)} matching files")

# Load and concatenate matching files

dfs = []

total_rows = 0

for file in matching_files:

print(f"Loading {file}...")

df = pd.read_parquet(file)

total_rows += len(df)

dfs.append(df)

print(f" Loaded {len(df)} rows")

# Combine data

if dfs:

combined_df = pd.concat(dfs, ignore_index=True)

if set_time_index and "time" in combined_df.columns:

combined_df = combined_df.set_index("time")

print(f"Combined data: {len(combined_df)} rows")

return combined_df

else:

return pd.DataFrame()

def find_first_x_indxs(

dist_forage: np.ndarray, dist_threshold: np.ndarray

) -> np.ndarray:

"""For each value in dist_threshold, find the first index in dist_forage that exceeds this."""

idxs = np.searchsorted(dist_forage, dist_threshold)

idxs = idxs[idxs < len(dist_forage)]

return idxs

def create_patch_name_type_map(block_start, subject_name, patch_df):

# Filter patch_df for this specific block_start and subject_name

relevant_patches = patch_df[

(patch_df["block_start"] == block_start)

& (patch_df["subject_name"] == subject_name)

]

# Initialize the mapping dictionary

patch_name_type_map = {"l": [], "m": [], "h": []}

# Group by patch_type and collect patch_names

for patch_type, group in relevant_patches.groupby("patch_type"):

patch_names = group["patch_name"].unique().tolist()

patch_name_type_map[patch_type] = patch_names

return patch_name_type_map

def pad_array(arr, max_len):

"""Pad a 1D array with NaNs to a specified maximum length."""

return np.pad(arr, (0, max_len - len(arr)), mode="constant", constant_values=np.nan)

def process_preference_data(dataframe, pref_column, cutoff, smooth_window):

"""Extract and process preference data from the specified column."""

# Get arrays of patch preferences

pref_arrays = dataframe[pref_column].values

# Filter out empty arrays and arrays with just one element

pref_arrays = [

arr for arr in pref_arrays if len(arr) > 1

] # Ensure at least 2 elements (to skip 0th)

if not pref_arrays:

return None, None, None

# Apply cutoff and start from 1st index instead of 0th

pref_arrays = [arr[1 : cutoff + 1] for arr in pref_arrays if len(arr) > 1]

# Find the maximum length to pad to

max_len = max(len(arr) for arr in pref_arrays)

# Pad arrays to uniform length

padded_arrays = [

np.pad(arr, (0, max_len - len(arr)), mode="constant", constant_values=np.nan)

for arr in pref_arrays

]

# Create a matrix of preferences

pref_matrix = np.vstack(padded_arrays)

# Smooth each row individually, preserving NaN positions

smoothed_matrix = np.zeros_like(pref_matrix)

for i, row in enumerate(pref_matrix):

if np.any(~np.isnan(row)):

# Create a copy of the row

smoothed_row = row.copy()

# Find valid (non-NaN) indices

valid_mask = ~np.isnan(row)

if np.sum(valid_mask) >= smooth_window:

# Apply smoothing only to valid values, but keep them in original positions

smoothed_row[valid_mask] = uniform_filter1d(

row[valid_mask], size=smooth_window, mode="nearest"

)

smoothed_matrix[i] = smoothed_row

else:

smoothed_matrix[i] = row

# Calculate mean and SEM from smoothed data

mean_pref = np.nanmean(smoothed_matrix, axis=0)

sem_pref = np.nanstd(smoothed_matrix, axis=0) / np.sqrt(

np.sum(~np.isnan(smoothed_matrix), axis=0)

)

# Create normalized x-axis

x_values = np.linspace(0, 1, len(mean_pref))

return x_values, mean_pref, sem_pref

def process_preference_data_with_matrix(dataframe, pref_column, cutoff, smooth_window):

"""Process preference data and return x-values, mean, SEM, and smoothed matrix."""

# Get arrays of patch preferences

pref_arrays = dataframe[pref_column].values

# Filter out empty arrays and arrays with just one element

pref_arrays = [

arr for arr in pref_arrays if len(arr) > 1

] # Ensure at least 2 elements (to skip 0th)

if not pref_arrays:

return None, None, None, None

# Apply cutoff and start from 1st index instead of 0th

pref_arrays = [arr[1 : cutoff + 1] for arr in pref_arrays if len(arr) > 1]

# Find the maximum length to pad to

max_len = max(len(arr) for arr in pref_arrays)

# Pad arrays to uniform length

padded_arrays = [

np.pad(arr, (0, max_len - len(arr)), mode="constant", constant_values=np.nan)

for arr in pref_arrays

]

# Create a matrix of preferences

pref_matrix = np.vstack(padded_arrays)

# Smooth each row individually, preserving NaN positions

smoothed_matrix = np.zeros_like(pref_matrix)

for i, row in enumerate(pref_matrix):

if np.any(~np.isnan(row)):

# Create a copy of the row

smoothed_row = row.copy()

# Find valid (non-NaN) indices

valid_mask = ~np.isnan(row)

if np.sum(valid_mask) >= smooth_window:

# Apply smoothing only to valid values, but keep them in original positions

smoothed_row[valid_mask] = uniform_filter1d(

row[valid_mask], size=smooth_window, mode="nearest"

)

smoothed_matrix[i] = smoothed_row

else:

smoothed_matrix[i] = row

# Calculate mean and SEM from smoothed data

mean_pref = np.nanmean(smoothed_matrix, axis=0)

sem_pref = np.nanstd(smoothed_matrix, axis=0) / np.sqrt(

np.sum(~np.isnan(smoothed_matrix), axis=0)

)

# Create normalized x-axis

x_values = np.linspace(0, 1, len(mean_pref))

return x_values, mean_pref, sem_pref, smoothed_matrix

def count_total_pellets(pel_patch_list):

"""Count total number of pellets in pel_patch list"""

if not isinstance(pel_patch_list, (list, np.ndarray)) or len(pel_patch_list) == 0:

return 0

return len(pel_patch_list)

# CHANGE THIS TO THE PATH WHERE

# YOUR LOCAL DATASET (PARQUET FILES) IS STORED

data_dir = Path("")

Solo vs. social behaviours#

Exploring#

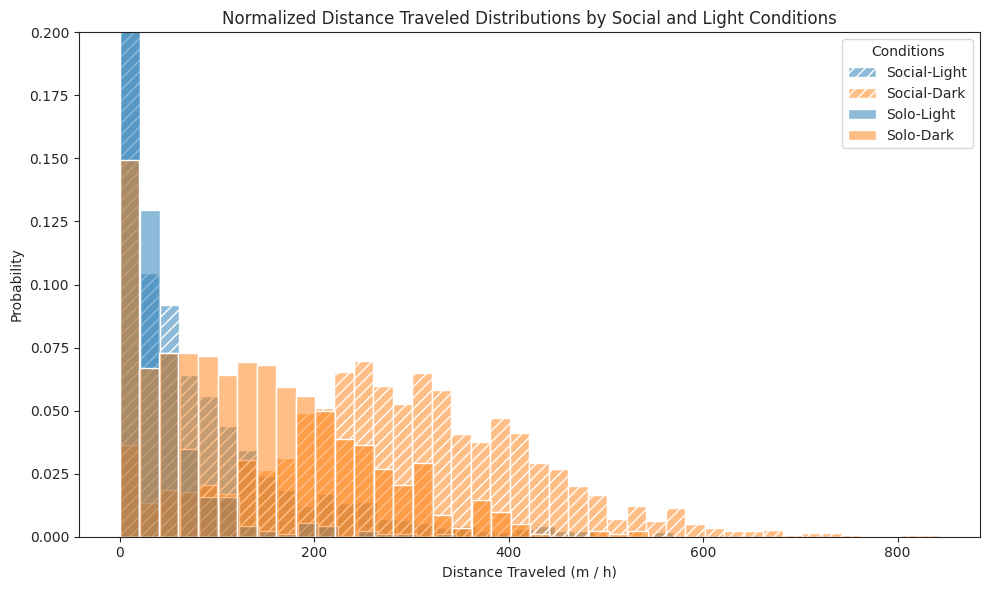

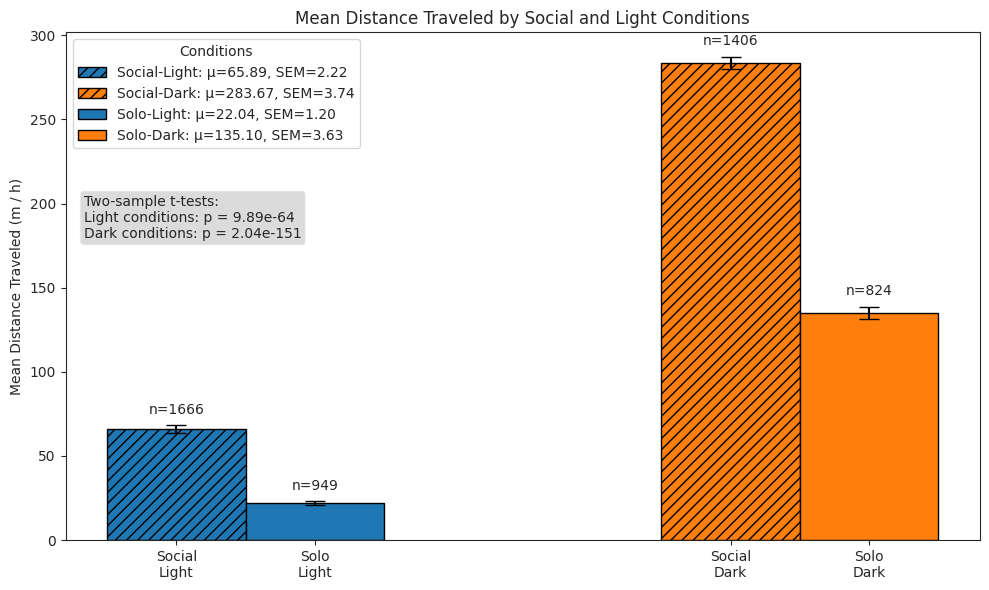

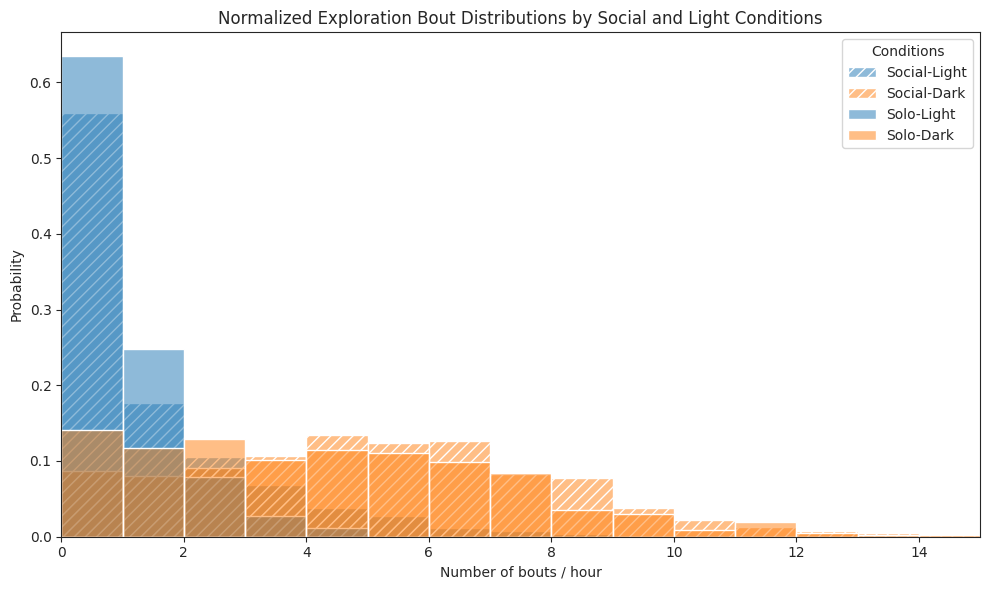

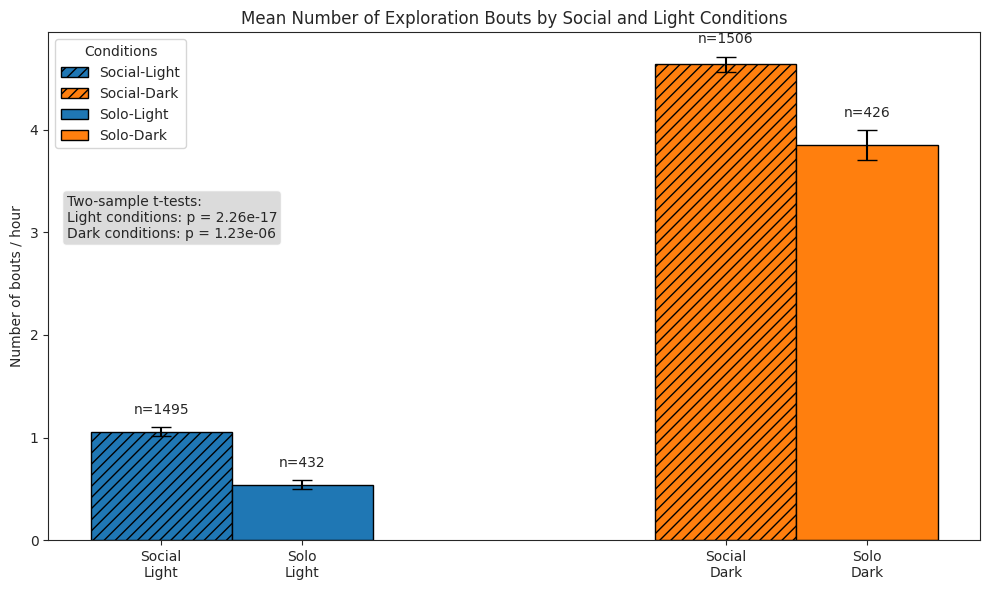

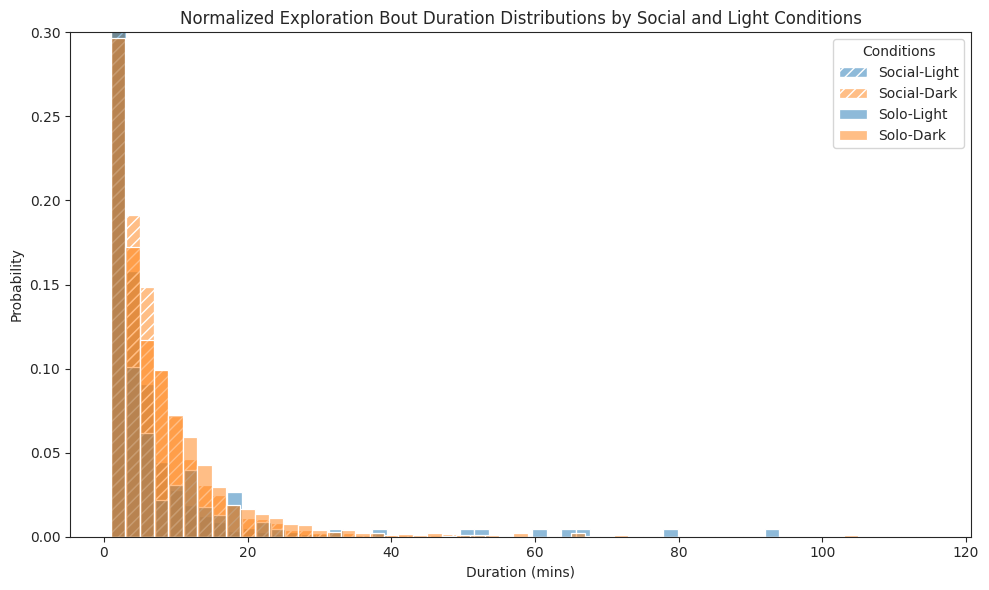

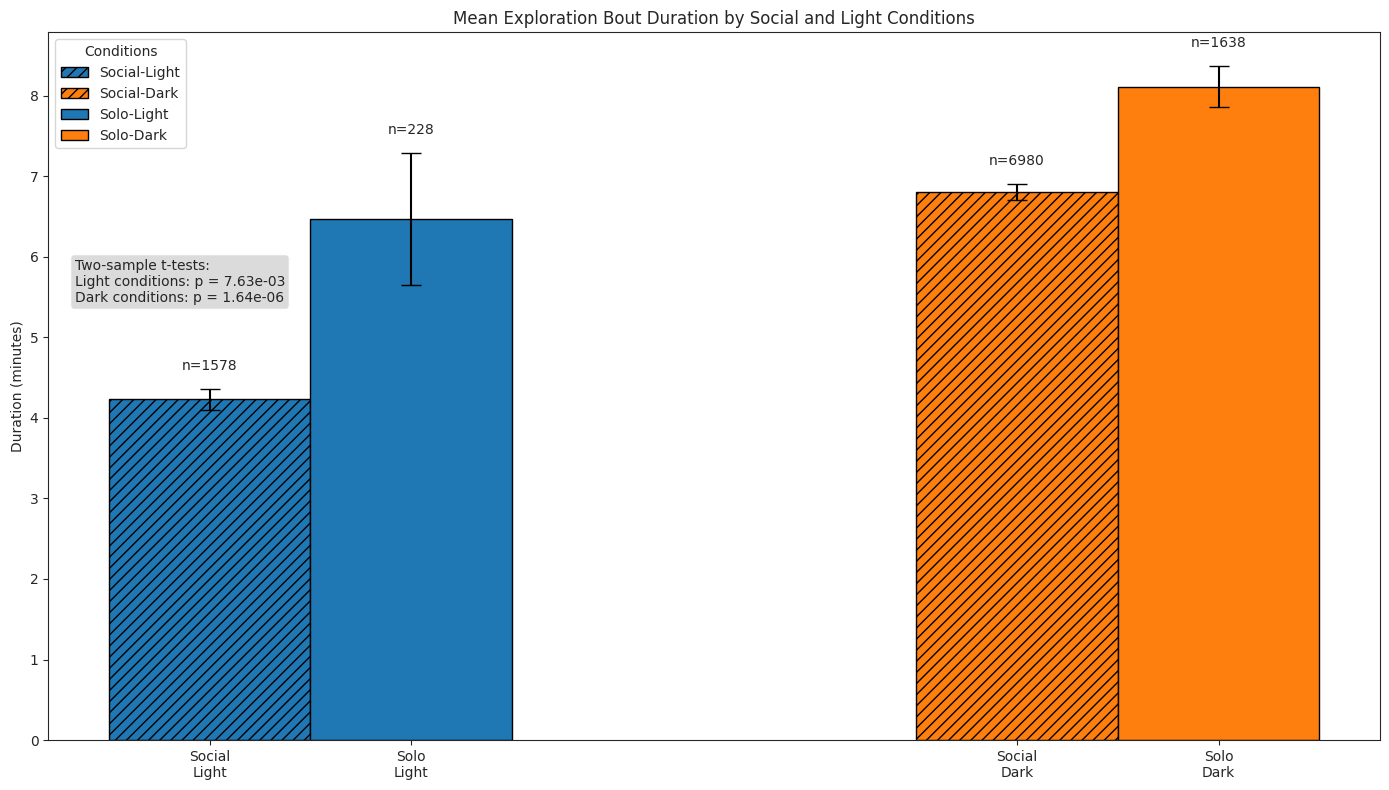

We compare subjects’ exploratory behaviour in solo and social conditions across light and dark cycles, and show that subjects in the social condition exhibit a stronger exploratory drive, covering greater distances.

Distance travelled#

This hidden cell is optional. It performs per-subject hourly distance computations across all experiments and periods based on multi-animal tracking data. As the computations are slow, we have precomputed the results and saved them to Parquet.

Show code cell source

Hide code cell source

# Final df:

# rows = hour-datetime,

# columns = distance, exp, social-bool, subject, light-bool

dist_trav_hour_df = pd.DataFrame(

columns=["hour", "distance", "exp", "social", "subject", "light"]

)

# For each period

# Load pos data

# Split into individual dfs

# If social, excise swaps

# Smooth down to 1s

# Calculate hour-by-hour distance traveled, and put into final df

exp_pbar = tqdm(experiments, desc="Experiments", position=0, leave=True)

for exp in exp_pbar:

period_pbar = tqdm(periods, desc="Periods", position=1, leave=True)

for period in period_pbar:

pos_df = load_data_from_parquet(

experiment_name=exp["name"],

period=period,

data_type="position",

data_dir=data_dir,

set_time_index=True,

)

for subject in pos_df["identity_name"].unique():

pos_df_subj = pos_df[pos_df["identity_name"] == subject]

pos_df_subj = pos_df_subj.resample("200ms").first().dropna(subset=["x"])

pos_df_subj[["x", "y"]] = pos_df_subj[["x", "y"]].rolling("1s").mean()

pos_df_subj = pos_df_subj.resample("1s").first().dropna(subset=["x"])

pos_df_subj["distance"] = np.sqrt(

(pos_df_subj["x"].diff() ** 2) + (pos_df_subj["y"].diff() ** 2)

)

pos_df_subj.at[pos_df_subj.index[0], "distance"] = 0

pos_df_subj["distance"] /= cm2px * 100 # convert to m

pos_df_subj["hour"] = pos_df_subj.index.floor("h")

pos_df_subj_hour = (

pos_df_subj.groupby("hour")["distance"].sum().reset_index()

)

pos_df_subj_hour["exp"] = exp["name"]

pos_df_subj_hour["social"] = period == "social"

pos_df_subj_hour["subject"] = subject

hour = pos_df_subj_hour["hour"].dt.hour

pos_df_subj_hour["light"] = ~((hour > light_off) & (hour < light_on))

dist_trav_hour_df = pd.concat(

[dist_trav_hour_df, pos_df_subj_hour], ignore_index=True

)

# Save as parquet

dist_trav_hour_df.to_parquet(

data_dir / "for_plots" / "dist_trav_hour_df.parquet",

engine="pyarrow",

compression="snappy",

index=False,

)

Here we will load and visualise the precomputed distances from the aforementioned Parquet file.

# Load the parquet file

dist_trav_hour_df = pd.read_parquet(

data_dir / "for_plots" / "dist_trav_hour_df.parquet",

engine="pyarrow",

)

dist_trav_hour_df.head()

| hour | distance | exp | social | subject | light | |

|---|---|---|---|---|---|---|

| 0 | 2024-01-31 11:00:00 | 214.375787 | social0.2-aeon3 | False | BAA-1104045 | False |

| 1 | 2024-01-31 12:00:00 | 358.672416 | social0.2-aeon3 | False | BAA-1104045 | False |

| 2 | 2024-01-31 13:00:00 | 301.952548 | social0.2-aeon3 | False | BAA-1104045 | False |

| 3 | 2024-01-31 14:00:00 | 284.154738 | social0.2-aeon3 | False | BAA-1104045 | False |

| 4 | 2024-01-31 15:00:00 | 420.268372 | social0.2-aeon3 | False | BAA-1104045 | False |

# Plot histograms for each combination

fig, ax = plt.subplots(figsize=(10, 6))

for i, (social_val, light_val) in enumerate(combos):

# Filter data for this combination

subset = dist_trav_hour_df[

(dist_trav_hour_df["social"] == social_val)

& (dist_trav_hour_df["light"] == light_val)

]

# Plot normalized histogram

hist = sns.histplot(

data=subset,

x="distance",

stat="probability", # This normalizes the histogram

alpha=0.5,

color=colors[light_val],

label=labels[i],

# kde=True, # Add kernel density estimate

common_norm=False, # Ensure each histogram is normalized separately

axes=ax,

binwidth=20,

)

# Set hatch pattern for bars

if hatches[social_val]:

for bar in hist.patches:

bar.set_hatch(hatches[social_val])

ax.set_title(

"Normalized Distance Traveled Distributions by Social and Light Conditions"

)

ax.set_xlabel("Distance Traveled (m / h)")

ax.set_ylabel("Probability")

ax.legend(title="Conditions")

ax.set_ylim(0, 0.2)

plt.tight_layout()

plt.show()

# Plot bar plot of means

fig, ax = plt.subplots(figsize=(10, 6))

summary_data = []

for social_val in [True, False]:

for light_val in [True, False]:

subset = dist_trav_hour_df[

(dist_trav_hour_df["social"] == social_val)

& (dist_trav_hour_df["light"] == light_val)

]

mean_dist = subset["distance"].mean()

sem_dist = subset["distance"].sem()

n_samples = len(subset)

summary_data.append(

{

"social": social_val,

"light": light_val,

"mean_distance": mean_dist,

"sem": sem_dist,

"condition": (

f"{'Social' if social_val else 'Solo'}-"

f"{'Light' if light_val else 'Dark'}",

),

"n": n_samples,

}

)

summary_df = pd.DataFrame(summary_data)

# Set up positions for the bars

bar_width = 0.5

x_pos = np.array([0.25, 2.25, 0.75, 2.75]) # create two groups with a gap in the middle

# Plot bars

for i, row in enumerate(summary_data):

pos = x_pos[i]

social_val = row["social"]

light_val = row["light"]

bar = ax.bar(

pos,

row["mean_distance"],

bar_width,

yerr=row["sem"],

color=colors[light_val],

edgecolor="black",

capsize=7,

label=f"{row['condition'][0]}: μ={row['mean_distance']:.2f}, SEM={row['sem']:.2f}",

)

# Apply hatching for social conditions

if hatches[social_val]:

bar[0].set_hatch(hatches[social_val])

# Add sample size as text above each bar

sample_size_txt = ax.text(

pos,

row["mean_distance"] + row["sem"] + 5,

f"n={row['n']}",

ha="center",

va="bottom",

)

ax.set_ylabel("Mean Distance Traveled (m / h)")

ax.set_xticks(x_pos)

ax.set_xticklabels(["Social\nLight", "Social\nDark", "Solo\nLight", "Solo\nDark"])

ax.set_title("Mean Distance Traveled by Social and Light Conditions")

ax.legend(title="Conditions", loc="upper left")

# Add stats tests

light_social = dist_trav_hour_df.query("social and light")["distance"]

light_solo = dist_trav_hour_df.query("not social and light")["distance"]

dark_social = dist_trav_hour_df.query("social and not light")["distance"]

dark_solo = dist_trav_hour_df.query("not social and not light")["distance"]

light_stat, light_p = stats.ttest_ind(

light_social, light_solo, alternative="two-sided", equal_var=False

)

dark_stat, dark_p = stats.ttest_ind(

dark_social, dark_solo, alternative="two-sided", equal_var=False

)

test_text = (

f"Two-sample t-tests:\n"

f"Light conditions: p = {light_p:.2e}\nDark conditions: p = {dark_p:.2e}"

)

props = dict(boxstyle="round,pad=0.3", facecolor="lightgray", alpha=0.8)

ax.text(

0.02,

0.68, # Position below the legend

test_text,

transform=ax.transAxes,

verticalalignment="top",

bbox=props,

)

plt.tight_layout()

plt.show()

Bouts#

# Final df:

# rows = hour-datetime,

# columns = n_bouts, exp, social-bool, subject, light-bool

explore_hour_df = pd.DataFrame(

{

"hour": pd.Series(dtype="datetime64[ns]"),

"n_bouts": pd.Series(dtype="int"),

"exp": pd.Series(dtype="string"),

"social": pd.Series(dtype="bool"),

"subject": pd.Series(dtype="string"),

"light": pd.Series(dtype="bool"),

}

)

explore_dur_df = pd.DataFrame(

{

"start": pd.Series(dtype="datetime64[ns]"),

"duration": pd.Series(dtype="timedelta64[ns]"),

"exp": pd.Series(dtype="string"),

"social": pd.Series(dtype="bool"),

"subject": pd.Series(dtype="string"),

"light": pd.Series(dtype="bool"),

}

)

exp_pbar = tqdm(experiments, desc="Experiments", position=0, leave=True)

for exp in exp_pbar:

period_pbar = tqdm(periods, desc="Periods", position=1, leave=False)

for period in period_pbar:

explore_bouts_df = load_data_from_parquet(

experiment_name=exp["name"],

period=period,

data_type="explore",

data_dir=data_dir,

set_time_index=True,

)

for subject in explore_bouts_df["subject"].unique():

explore_df_subj = explore_bouts_df[explore_bouts_df["subject"] == subject]

explore_df_subj["hour"] = explore_df_subj["start"].dt.floor("h")

min_hour, max_hour = (

explore_df_subj["hour"].min(),

explore_df_subj["hour"].max(),

)

complete_hours = pd.DataFrame(

{"hour": pd.date_range(start=min_hour, end=max_hour, freq="h")}

)

hour_counts = (

explore_df_subj.groupby("hour").size().reset_index(name="n_bouts")

)

explore_df_subj_hour = pd.merge(

complete_hours, hour_counts, on="hour", how="left"

).fillna(0)

explore_df_subj_hour["n_bouts"] = explore_df_subj_hour["n_bouts"].astype(

int

)

explore_df_subj_hour["exp"] = exp["name"]

explore_df_subj_hour["social"] = period == "social"

explore_df_subj_hour["subject"] = subject

hour = explore_df_subj_hour["hour"].dt.hour

explore_df_subj_hour["light"] = ~((hour > light_off) & (hour < light_on))

explore_hour_df = pd.concat(

[explore_hour_df, explore_df_subj_hour], ignore_index=True

)

explore_dur_subj = explore_df_subj[["start", "duration"]].copy()

explore_dur_subj["exp"] = exp["name"]

explore_dur_subj["social"] = period == "social"

explore_dur_subj["subject"] = subject

hour = explore_dur_subj["start"].dt.hour

explore_dur_subj["light"] = ~((hour > light_off) & (hour < light_on))

explore_dur_df = pd.concat(

[explore_dur_df, explore_dur_subj], ignore_index=True

)

explore_dur_df["duration"] = explore_dur_df["duration"].dt.total_seconds() / 60

explore_dur_df = explore_dur_df[explore_dur_df["duration"] < 120]

explore_hour_df.head()

| hour | n_bouts | exp | social | subject | light | |

|---|---|---|---|---|---|---|

| 0 | 2024-02-09 16:00:00 | 8 | social0.2-aeon3 | True | BAA-1104045 | False |

| 1 | 2024-02-09 17:00:00 | 8 | social0.2-aeon3 | True | BAA-1104045 | False |

| 2 | 2024-02-09 18:00:00 | 7 | social0.2-aeon3 | True | BAA-1104045 | False |

| 3 | 2024-02-09 19:00:00 | 4 | social0.2-aeon3 | True | BAA-1104045 | False |

| 4 | 2024-02-09 20:00:00 | 3 | social0.2-aeon3 | True | BAA-1104045 | True |

explore_dur_df.head()

| start | duration | exp | social | subject | light | |

|---|---|---|---|---|---|---|

| 0 | 2024-02-09 16:10:02.960 | 1.0 | social0.2-aeon3 | True | BAA-1104045 | False |

| 1 | 2024-02-09 16:17:02.960 | 1.0 | social0.2-aeon3 | True | BAA-1104045 | False |

| 2 | 2024-02-09 16:19:02.960 | 5.0 | social0.2-aeon3 | True | BAA-1104045 | False |

| 3 | 2024-02-09 16:25:02.960 | 13.0 | social0.2-aeon3 | True | BAA-1104045 | False |

| 4 | 2024-02-09 16:39:02.960 | 1.0 | social0.2-aeon3 | True | BAA-1104045 | False |

# Plot hist of bouts per hour

fig, ax = plt.subplots(figsize=(10, 6))

# Plot histograms for each combination

for i, (social_val, light_val) in enumerate(combos):

subset = explore_hour_df[

(explore_hour_df["social"] == social_val)

& (explore_hour_df["light"] == light_val)

]

# Plot normalized histogram

hist = sns.histplot(

data=subset,

x="n_bouts",

stat="probability",

alpha=0.5,

color=colors[light_val],

label=labels[i],

common_norm=False, # Ensure each histogram is normalized separately

axes=ax,

binwidth=1,

)

# Set hatch pattern for bars

if hatches[social_val]:

for bar in hist.patches:

bar.set_hatch(hatches[social_val])

ax.set_title("Normalized Exploration Bout Distributions by Social and Light Conditions")

ax.set_xlabel("Number of bouts / hour")

ax.set_ylabel("Probability")

ax.legend(title="Conditions")

ax.set_xticks(np.arange(0, 15, 2))

ax.set_xlim(0, 15)

plt.tight_layout()

plt.show()

# Plot bars of bouts per hour

fig, ax = plt.subplots(figsize=(10, 6))

summary_data = []

for social_val in [True, False]:

for light_val in [True, False]:

subset = explore_hour_df[

(explore_hour_df["social"] == social_val)

& (explore_hour_df["light"] == light_val)

]

mean_n_bouts = subset["n_bouts"].mean()

sem_n_bouts = subset["n_bouts"].sem()

n_samples = len(subset)

summary_data.append(

{

"social": social_val,

"light": light_val,

"mean_n_bouts": mean_n_bouts,

"sem": sem_n_bouts,

"condition": f"{'Social' if social_val else 'Solo'}-{'Light' if light_val else 'Dark'}",

"n": n_samples,

}

)

summary_df = pd.DataFrame(summary_data)

# Set up positions for the bars

bar_width = 0.5

x_pos = np.array([0.25, 2.25, 0.75, 2.75]) # create two groups with a gap in the middle

# Plot bars

for i, row in enumerate(summary_data):

pos = x_pos[i]

social_val = row["social"]

light_val = row["light"]

bar = ax.bar(

pos,

row["mean_n_bouts"],

bar_width,

yerr=row["sem"],

color=colors[light_val],

edgecolor="black",

capsize=7,

label=row["condition"],

)

# Apply hatching for social conditions

if hatches[social_val]:

bar[0].set_hatch(hatches[social_val])

# Add sample size as text above each bar

sample_size_txt = ax.text(

pos,

row["mean_n_bouts"] + row["sem"] + 0.1,

f"n={row['n']}",

ha="center",

va="bottom",

)

ax.set_title("Mean Number of Exploration Bouts by Social and Light Conditions")

ax.set_ylabel("Number of bouts / hour")

ax.set_xticks(x_pos)

ax.set_xticklabels(["Social\nLight", "Social\nDark", "Solo\nLight", "Solo\nDark"])

ax.legend(title="Conditions", loc="upper left")

# Perform Wilcoxon rank sum tests (Mann-Whitney U)

light_social = explore_hour_df.query("social and light")["n_bouts"]

light_solo = explore_hour_df.query("not social and light")["n_bouts"]

dark_social = explore_hour_df.query("social and not light")["n_bouts"]

dark_solo = explore_hour_df.query("not social and not light")["n_bouts"]

light_stat, light_p = stats.ttest_ind(

light_social, light_solo, alternative="two-sided", equal_var=False

)

dark_stat, dark_p = stats.ttest_ind(

dark_social, dark_solo, alternative="two-sided", equal_var=False

)

test_text = (

f"Two-sample t-tests:\n"

f"Light conditions: p = {light_p:.2e}\nDark conditions: p = {dark_p:.2e}"

)

props = dict(boxstyle="round,pad=0.3", facecolor="lightgray", alpha=0.8)

ax.text(

0.02,

0.68, # Position below the legend

test_text,

transform=ax.transAxes,

verticalalignment="top",

bbox=props,

)

plt.tight_layout()

plt.show()

# Plot histograms of durations of bouts for each combination

fig, ax = plt.subplots(figsize=(10, 6))

for i, (social_val, light_val) in enumerate(combos):

subset = explore_dur_df[

(explore_dur_df["social"] == social_val)

& (explore_dur_df["light"] == light_val)

]

# Plot normalized histogram

hist = sns.histplot(

data=subset,

x="duration",

stat="probability",

alpha=0.5,

color=colors[light_val],

label=labels[i],

# kde=True, # Add kernel density estimate

common_norm=False, # Ensure each histogram is normalized separately

axes=ax,

binwidth=2,

)

# Set hatch pattern for bars

if hatches[social_val]:

for bar in hist.patches:

bar.set_hatch(hatches[social_val])

ax.set_title(

"Normalized Exploration Bout Duration Distributions by Social and Light Conditions"

)

ax.set_xlabel("Duration (mins)")

ax.set_ylabel("Probability")

ax.legend(title="Conditions")

ax.set_ylim(0, 0.3)

plt.tight_layout()

plt.show()

# Plot bars of durations of bouts

fig, ax = plt.subplots(figsize=(14, 8))

summary_data = []

for social_val in [True, False]:

for light_val in [True, False]:

subset = explore_dur_df[

(explore_dur_df["social"] == social_val)

& (explore_dur_df["light"] == light_val)

]

mean_duration = subset["duration"].mean()

sem_duration = subset["duration"].sem()

n_samples = len(subset)

summary_data.append(

{

"social": social_val,

"light": light_val,

"mean_duration": mean_duration,

"sem": sem_duration,

"condition": f"{'Social' if social_val else 'Solo'}-{'Light' if light_val else 'Dark'}",

"n": n_samples,

}

)

summary_df = pd.DataFrame(summary_data)

# Set up positions for the bars

bar_width = 0.5

x_pos = np.array([0.25, 2.25, 0.75, 2.75]) # create two groups with a gap in the middle

# Plot bars

for i, row in enumerate(summary_data):

pos = x_pos[i]

social_val = row["social"]

light_val = row["light"]

bar = ax.bar(

pos,

row["mean_duration"],

bar_width,

yerr=row["sem"],

color=colors[light_val],

edgecolor="black",

capsize=7,

label=row["condition"],

)

# Apply hatching for social conditions

if hatches[social_val]:

bar[0].set_hatch(hatches[social_val])

# Add sample size as text above each bar

sample_size_txt = ax.text(

pos,

row["mean_duration"] + row["sem"] + 0.2,

f"n={row['n']}",

ha="center",

va="bottom",

)

ax.set_title("Mean Exploration Bout Duration by Social and Light Conditions")

ax.set_ylabel("Duration (minutes)")

ax.set_xticks(x_pos)

ax.set_xticklabels(["Social\nLight", "Social\nDark", "Solo\nLight", "Solo\nDark"])

ax.legend(title="Conditions", loc="upper left")

# Perform Wilcoxon rank sum tests (Mann-Whitney U)

light_social = explore_dur_df.query("social and light")["duration"]

light_solo = explore_dur_df.query("not social and light")["duration"]

dark_social = explore_dur_df.query("social and not light")["duration"]

dark_solo = explore_dur_df.query("not social and not light")["duration"]

light_stat, light_p = stats.ttest_ind(

light_social, light_solo, alternative="two-sided", equal_var=False

)

dark_stat, dark_p = stats.ttest_ind(

dark_social, dark_solo, alternative="two-sided", equal_var=False

)

test_text = (

f"Two-sample t-tests:\n"

f"Light conditions: p = {light_p:.2e}\nDark conditions: p = {dark_p:.2e}"

)

props = dict(boxstyle="round,pad=0.3", facecolor="lightgray", alpha=0.8)

ax.text(

0.02,

0.68, # Position below the legend

test_text,

transform=ax.transAxes,

verticalalignment="top",

bbox=props,

)

plt.tight_layout()

plt.show()

# Plot histograms of times of bouts over all hours

fig, ax = plt.subplots(figsize=(14, 8))

for i, social_val in enumerate([True, False]):

subset = explore_dur_df[(explore_dur_df["social"] == social_val)]

# Create the histogram

hist = sns.histplot(

data=subset,

x=subset["start"].dt.hour,

stat="probability", # Normalize to show probability

alpha=0.5,

color="teal",

label="Social" if social_val else "Solo",

common_norm=False, # Each condition normalized separately

ax=ax,

bins=24, # 24 hours

discrete=True, # Since hours are discrete values

)

# Apply hatching pattern for social conditions

if hatches[social_val]:

# Apply the hatch pattern to each bar

for patch in hist.patches:

patch.set_hatch(hatches[social_val])

# Set x-tick labels for every hour

ax.set_xticks(range(0, 24))

ax.set_xticklabels([f"{h:02d}:00" for h in range(0, 24)], rotation=45)

# Customize axis labels and title

ax.set_title("Distribution of Exploration Bouts Throughout the Day")

ax.set_xlabel("Hour of Day")

ax.set_ylabel("Probability")

ax.legend(title="Conditions")

plt.tight_layout()

plt.show()

Foraging#

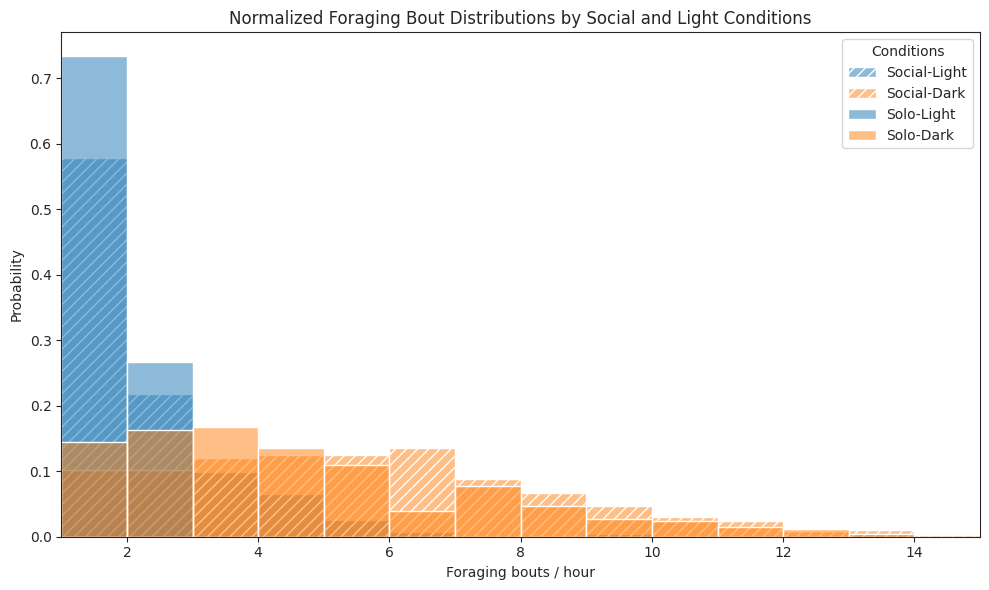

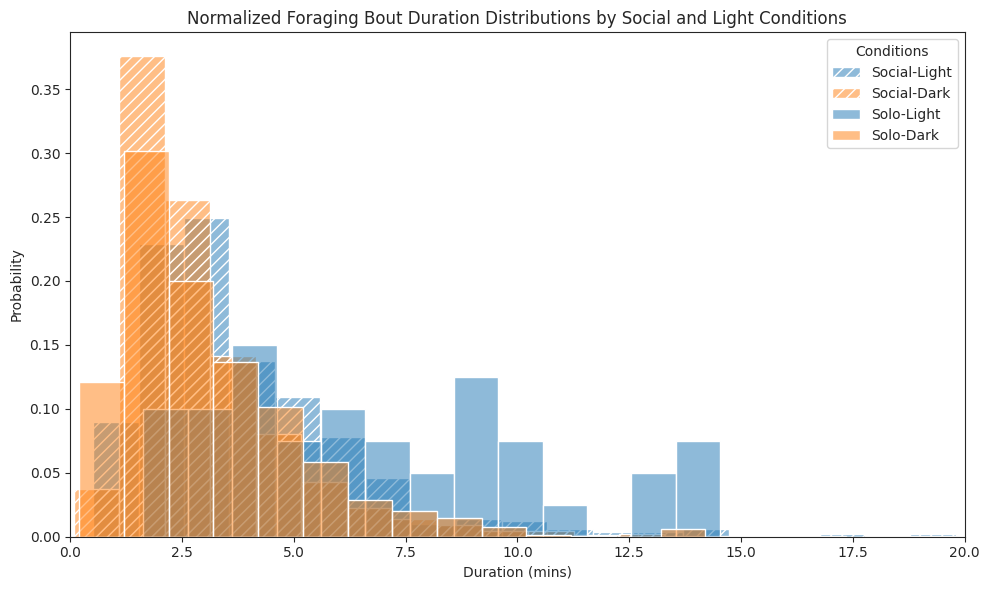

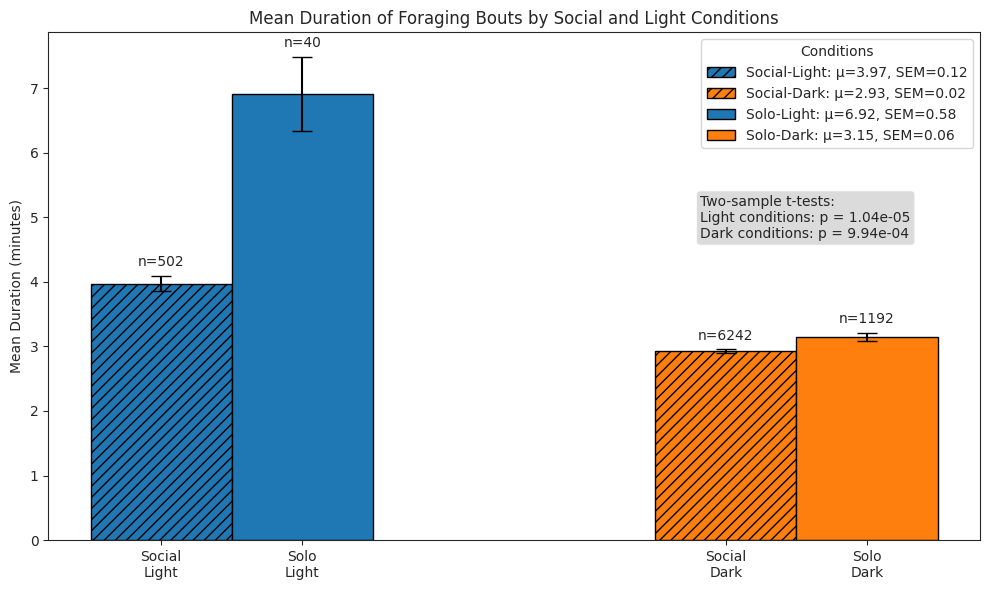

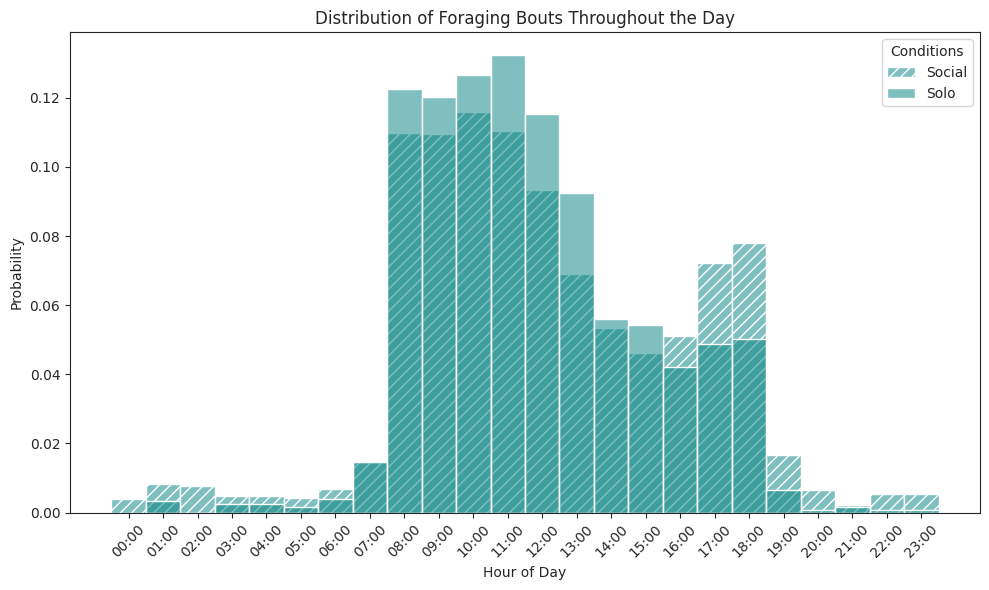

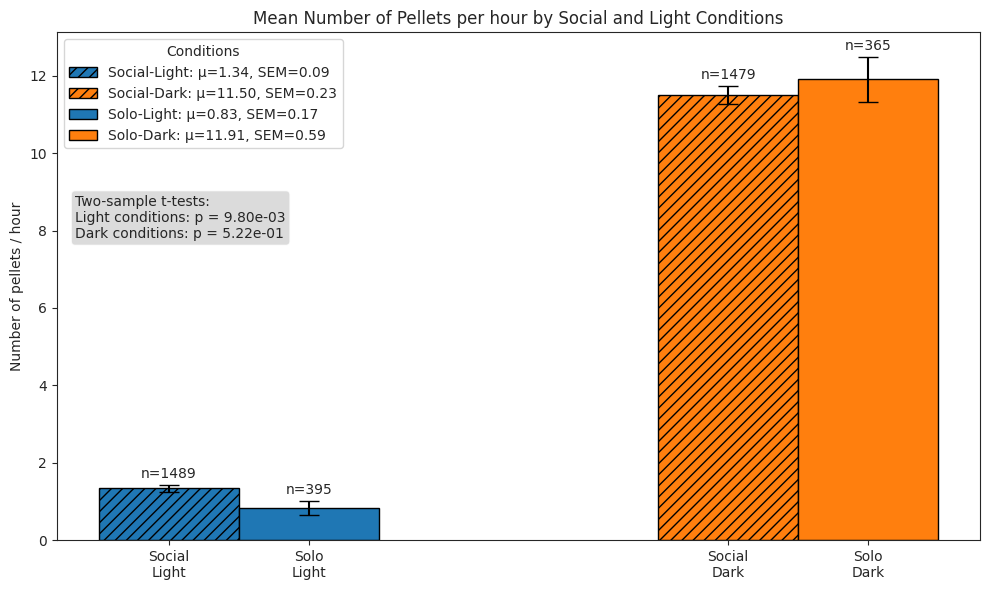

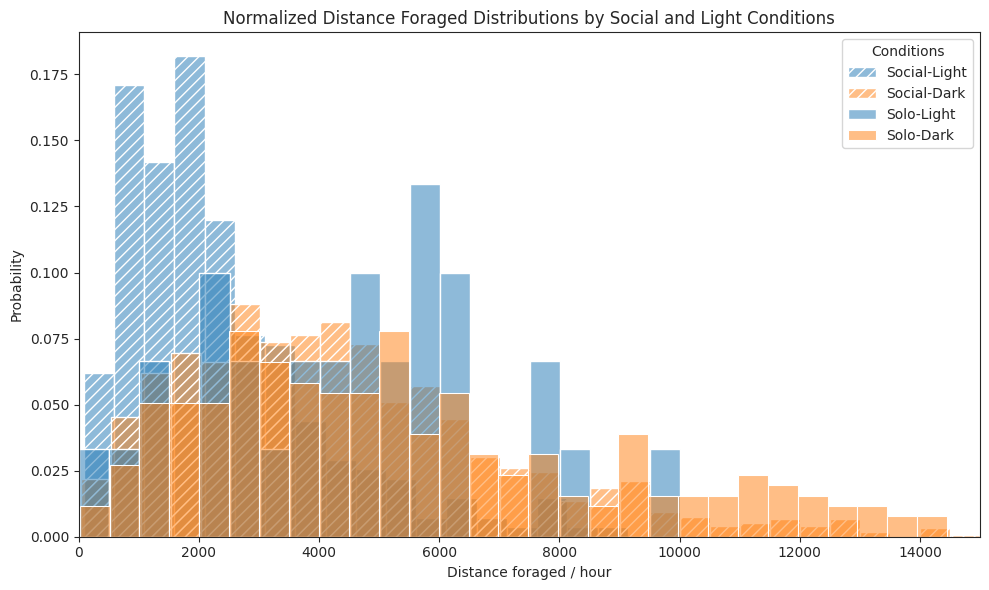

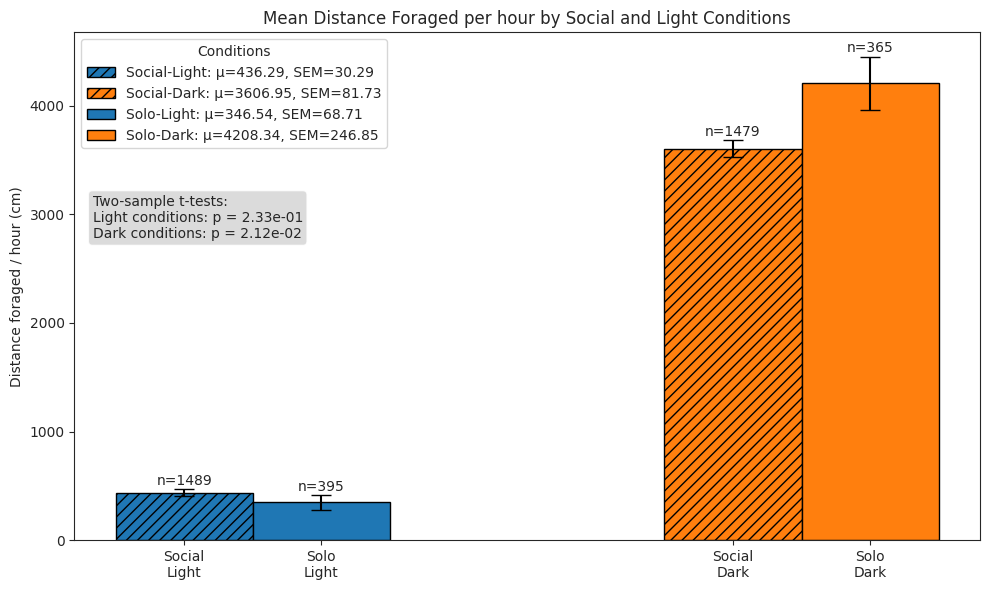

We compare subjects’ foraging behaviour in solo and social conditions across light and dark cycles, and show that subjects in the social condition engaged in more frequent but shorter foraging bouts, yielding a higher total pellet count than in the solo condition.

Notably, despite the increaed activity and pellet yield, the total distance spun on the foraging wheel was lower in the social condition, reflecting a shift towards more efficient foraging under social conditions.

# Final dfs:

# 1. forage_hour_df: hour, n_pellets, dist_forage, n_bouts, exp, social-bool, subject, light-bool

# 2. forage_dur_df: start, duration(mins), exp, social-bool, subject, light-bool

forage_hour_df = pd.DataFrame(

{

"hour": pd.Series(dtype="datetime64[ns]"),

"n_bouts": pd.Series(dtype="int"),

"n_pellets": pd.Series(dtype="int"),

"dist_forage": pd.Series(dtype="float"),

"exp": pd.Series(dtype="string"),

"social": pd.Series(dtype="bool"),

"subject": pd.Series(dtype="string"),

"light": pd.Series(dtype="bool"),

}

)

forage_dur_df = pd.DataFrame(

{

"start": pd.Series(dtype="datetime64[ns]"),

"duration": pd.Series(dtype="float"), # in minutes

"exp": pd.Series(dtype="string"),

"social": pd.Series(dtype="bool"),

"subject": pd.Series(dtype="string"),

"light": pd.Series(dtype="bool"),

}

)

# For each period

# Load foraging data

# Split into individual dfs

# Calculate hour-by-hour metrics and put into final df

exp_pbar = tqdm(experiments, desc="Experiments", position=0, leave=True)

for exp in exp_pbar:

period_pbar = tqdm(periods, desc="Periods", position=1, leave=False)

for period in period_pbar:

forage_df = load_data_from_parquet(

experiment_name=exp["name"],

period=period,

data_type="foraging",

data_dir=data_dir,

set_time_index=True,

)

for subject in forage_df["subject"].unique():

forage_df_subj = forage_df[forage_df["subject"] == subject]

forage_df_subj["hour"] = forage_df_subj["start"].dt.floor("h")

hour_counts = pd.merge(

forage_df_subj.groupby("hour").size().reset_index(name="n_bouts"),

forage_df_subj.groupby("hour").agg(

n_pellets=("n_pellets", "sum"),

cum_wheel_dist=("cum_wheel_dist", "sum"),

),

on="hour",

how="left",

)

min_hour, max_hour = (

forage_df_subj["hour"].min(),

forage_df_subj["hour"].max(),

)

complete_hours = pd.DataFrame(

{"hour": pd.date_range(start=min_hour, end=max_hour, freq="h")}

)

forage_df_subj_hour = pd.merge(

complete_hours, hour_counts, on="hour", how="left"

).fillna(0)

forage_df_subj_hour["n_bouts"] = forage_df_subj_hour["n_bouts"].astype(int)

# Rename 'cum_wheel_dist' col

forage_df_subj_hour.rename(

columns={"cum_wheel_dist": "dist_forage"}, inplace=True

)

forage_df_subj_hour["exp"] = exp["name"]

forage_df_subj_hour["social"] = period == "social"

forage_df_subj_hour["subject"] = subject

hour = forage_df_subj_hour["hour"].dt.hour

forage_df_subj_hour["light"] = ~((hour > light_off) & (hour < light_on))

forage_hour_df = pd.concat(

[forage_hour_df, forage_df_subj_hour], ignore_index=True

)

forage_dur_subj = forage_df_subj[["start"]].copy()

forage_dur_subj["duration"] = (

forage_df_subj["end"] - forage_df_subj["start"]

).dt.total_seconds() / 60

forage_dur_subj["exp"] = exp["name"]

forage_dur_subj["social"] = period == "social"

forage_dur_subj["subject"] = subject

hour = forage_df_subj["start"].dt.hour

forage_dur_subj["light"] = ~((hour > light_off) & (hour < light_on))

forage_dur_df = pd.concat(

[forage_dur_df, forage_dur_subj], ignore_index=True

)

forage_hour_df.head()

| hour | n_bouts | n_pellets | dist_forage | exp | social | subject | light | |

|---|---|---|---|---|---|---|---|---|

| 0 | 2024-02-09 17:00:00 | 2 | 3.0 | 2166.585576 | social0.2-aeon3 | True | BAA-1104045 | False |

| 1 | 2024-02-09 18:00:00 | 5 | 9.0 | 7640.816624 | social0.2-aeon3 | True | BAA-1104045 | False |

| 2 | 2024-02-09 19:00:00 | 2 | 7.0 | 4032.787109 | social0.2-aeon3 | True | BAA-1104045 | False |

| 3 | 2024-02-09 20:00:00 | 3 | 4.0 | 1939.771139 | social0.2-aeon3 | True | BAA-1104045 | True |

| 4 | 2024-02-09 21:00:00 | 0 | 0.0 | 0.000000 | social0.2-aeon3 | True | BAA-1104045 | True |

forage_dur_df.head()

| start | duration | exp | social | subject | light | |

|---|---|---|---|---|---|---|

| 0 | 2024-02-09 17:52:33.300 | 2.521333 | social0.2-aeon3 | True | BAA-1104045 | False |

| 1 | 2024-02-09 17:59:05.520 | 3.124333 | social0.2-aeon3 | True | BAA-1104045 | False |

| 2 | 2024-02-09 18:07:29.060 | 1.892333 | social0.2-aeon3 | True | BAA-1104045 | False |

| 3 | 2024-02-09 18:17:54.320 | 3.776333 | social0.2-aeon3 | True | BAA-1104045 | False |

| 4 | 2024-02-09 18:38:15.980 | 5.895667 | social0.2-aeon3 | True | BAA-1104045 | False |

# Plot foraging bouts per hour histogram for each combination

fig, ax = plt.subplots(figsize=(10, 6))

for i, (social_val, light_val) in enumerate(combos):

subset = forage_hour_df[

(forage_hour_df["social"] == social_val)

& (forage_hour_df["light"] == light_val)

& (forage_hour_df["n_pellets"] > 0)

]

# Plot normalized histogram

hist = sns.histplot(

data=subset,

x="n_bouts",

stat="probability",

alpha=0.5,

color=colors[light_val],

label=labels[i],

# kde=True, # Add kernel density estimate

common_norm=False, # Ensure each histogram is normalized separately

axes=ax,

binwidth=1,

)

# Set hatch pattern for bars

if hatches[social_val]:

for bar in hist.patches:

bar.set_hatch(hatches[social_val])

ax.set_title("Normalized Foraging Bout Distributions by Social and Light Conditions")

ax.set_xlabel("Foraging bouts / hour")

ax.set_ylabel("Probability")

ax.legend(title="Conditions")

ax.set_xlim(1, 15)

plt.tight_layout()

plt.show()

# Plot foraging bouts per hour bars

fig, ax = plt.subplots(figsize=(10, 6))

summary_data = []

for social_val in [True, False]:

for light_val in [True, False]:

subset = forage_hour_df[

(forage_hour_df["social"] == social_val)

& (forage_hour_df["light"] == light_val)

]

mean_n_bouts = subset["n_bouts"].mean()

sem_n_bouts = subset["n_bouts"].sem()

n_samples = len(subset)

summary_data.append(

{

"social": social_val,

"light": light_val,

"mean_n_bouts": mean_n_bouts,

"sem": sem_n_bouts,

"condition": f"{'Social' if social_val else 'Solo'}-{'Light' if light_val else 'Dark'}",

"n": n_samples,

}

)

summary_df = pd.DataFrame(summary_data)

# Set up positions for the bars

bar_width = 0.5

x_pos = np.array([0.25, 2.25, 0.75, 2.75]) # create two groups with a gap in the middle

# Plot bars

for i, row in enumerate(summary_data):

pos = x_pos[i]

social_val = row["social"]

light_val = row["light"]

bar = ax.bar(

pos,

row["mean_n_bouts"],

bar_width,

yerr=row["sem"],

color=colors[light_val],

edgecolor="black",

capsize=7,

label=f"{row['condition']}: μ={row['mean_n_bouts']:.2f}, SEM={row['sem']:.2f}",

)

# Apply hatching for social conditions

if hatches[social_val]:

bar[0].set_hatch(hatches[social_val])

# Add sample size as text above each bar

sample_size_txt = ax.text(

pos,

row["mean_n_bouts"] + row["sem"] + 0.1,

f"n={row['n']}",

ha="center",

va="bottom",

)

ax.set_title("Mean Number of Foraging Bouts per Hour by Social and Light Conditions")

ax.set_ylabel("Number of bouts / hour")

ax.set_xticks(x_pos)

ax.set_xticklabels(["Social\nLight", "Social\nDark", "Solo\nLight", "Solo\nDark"])

ax.legend(title="Conditions", loc="upper left")

# Wilcoxon rank sum tests

light_social = forage_hour_df.query("social and light")["n_bouts"]

light_solo = forage_hour_df.query("not social and light")["n_bouts"]

dark_social = forage_hour_df.query("social and not light")["n_bouts"]

dark_solo = forage_hour_df.query("not social and not light")["n_bouts"]

light_stat, light_p = stats.ttest_ind(

light_social, light_solo, alternative="two-sided", equal_var=False

)

dark_stat, dark_p = stats.ttest_ind(

dark_social, dark_solo, alternative="two-sided", equal_var=False

)

test_text = (

f"Two-sample t-tests:\n"

f"Light conditions: p = {light_p:.2e}\nDark conditions: p = {dark_p:.2e}"

)

props = dict(boxstyle="round,pad=0.3", facecolor="lightgray", alpha=0.8)

ax.text(

0.02,

0.68,

test_text,

transform=ax.transAxes,

verticalalignment="top",

bbox=props,

)

plt.tight_layout()

plt.show()

# Plot foraging bouts duration histogram for each combination

fig, ax = plt.subplots(figsize=(10, 6))

for i, (social_val, light_val) in enumerate(combos):

subset = forage_dur_df[

(forage_dur_df["social"] == social_val) & (forage_dur_df["light"] == light_val)

]

# Plot normalized histogram

hist = sns.histplot(

data=subset,

x="duration",

stat="probability",

alpha=0.5,

color=colors[light_val],

label=labels[i],

# kde=True, # Add kernel density estimate

common_norm=False, # Ensure each histogram is normalized separately

axes=ax,

binwidth=1,

)

# Set hatch pattern for bars

if hatches[social_val]:

for bar in hist.patches:

bar.set_hatch(hatches[social_val])

ax.set_title(

"Normalized Foraging Bout Duration Distributions by Social and Light Conditions"

)

ax.set_xlabel("Duration (mins)")

ax.set_ylabel("Probability")

ax.legend(title="Conditions")

ax.set_xlim(0, 20)

plt.tight_layout()

plt.show()

# Plot foraging bouts duration bars

max_forage_thresh = 30 # in minutes

fig, ax = plt.subplots(figsize=(10, 6))

summary_data = []

for social_val in [True, False]:

for light_val in [True, False]:

subset = forage_dur_df[

(forage_dur_df["social"] == social_val)

& (forage_dur_df["light"] == light_val)

& (forage_dur_df["duration"] < max_forage_thresh)

]

mean_duration = subset["duration"].mean()

sem_duration = subset["duration"].sem()

n_samples = len(subset)

summary_data.append(

{

"social": social_val,

"light": light_val,

"mean_duration": mean_duration,

"sem": sem_duration,

"condition": f"{'Social' if social_val else 'Solo'}-{'Light' if light_val else 'Dark'}",

"n": n_samples,

}

)

summary_df = pd.DataFrame(summary_data)

# Set up positions for the bars

bar_width = 0.5

x_pos = np.array([0.25, 2.25, 0.75, 2.75]) # create two groups with a gap in the middle

# Plot bars

for i, row in enumerate(summary_data):

pos = x_pos[i]

social_val = row["social"]

light_val = row["light"]

bar = ax.bar(

pos,

row["mean_duration"],

bar_width,

yerr=row["sem"],

color=colors[light_val],

edgecolor="black",

capsize=7,

label=f"{row['condition']}: μ={row['mean_duration']:.2f}, SEM={row['sem']:.2f}",

)

# Apply hatching for social conditions

if hatches[social_val]:

bar[0].set_hatch(hatches[social_val])

# Add sample size as text above each bar

sample_size_txt = ax.text(

pos,

row["mean_duration"] + row["sem"] + 0.1,

f"n={row['n']}",

ha="center",

va="bottom",

)

ax.set_title("Mean Duration of Foraging Bouts by Social and Light Conditions")

ax.set_ylabel("Mean Duration (minutes)")

ax.set_xticks(x_pos)

ax.set_xticklabels(["Social\nLight", "Social\nDark", "Solo\nLight", "Solo\nDark"])

ax.legend(title="Conditions", loc="upper right")

# Wilcoxon rank sum tests

light_social = forage_dur_df.query(

"social and light and duration < @max_forage_thresh"

)["duration"]

light_solo = forage_dur_df.query(

"not social and light and duration < @max_forage_thresh"

)["duration"]

dark_social = forage_dur_df.query(

"social and not light and duration < @max_forage_thresh"

)["duration"]

dark_solo = forage_dur_df.query(

"not social and not light and duration < @max_forage_thresh"

)["duration"]

light_stat, light_p = stats.ttest_ind(

light_social, light_solo, alternative="two-sided", equal_var=False

)

dark_stat, dark_p = stats.ttest_ind(

dark_social, dark_solo, alternative="two-sided", equal_var=False

)

test_text = (

f"Two-sample t-tests:\n"

f"Light conditions: p = {light_p:.2e}\nDark conditions: p = {dark_p:.2e}"

)

props = dict(boxstyle="round,pad=0.3", facecolor="lightgray", alpha=0.8)

ax.text(

0.70,

0.68,

test_text,

transform=ax.transAxes,

verticalalignment="top",

bbox=props,

)

plt.tight_layout()

plt.show()

# Plot foraging bouts over all hours histogram

fig, ax = plt.subplots(figsize=(10, 6))

for i, social_val in enumerate([True, False]):

subset = forage_dur_df[(forage_dur_df["social"] == social_val)]

# Create the histogram

hist = sns.histplot(

data=subset,

x=subset["start"].dt.hour,

stat="probability", # Normalize to show probability

alpha=0.5,

color="teal",

label="Social" if social_val else "Solo",

common_norm=False, # Each condition normalized separately

ax=ax,

bins=24, # 24 hours

discrete=True, # Since hours are discrete values

)

# Apply hatching pattern for social conditions

if hatches[social_val]:

# Apply the hatch pattern to each bar

for patch in hist.patches:

patch.set_hatch(hatches[social_val])

# Set x-tick labels for every hour

ax.set_xticks(range(0, 24))

ax.set_xticklabels([f"{h:02d}:00" for h in range(0, 24)], rotation=45)

# Customize axis labels and title

ax.set_title("Distribution of Foraging Bouts Throughout the Day")

ax.set_xlabel("Hour of Day")

ax.set_ylabel("Probability")

ax.legend(title="Conditions")

plt.tight_layout()

plt.show()

# Plot pellet rate per hour histograms for each combination

fig, ax = plt.subplots(figsize=(10, 6))

for i, (social_val, light_val) in enumerate(combos):

subset = forage_hour_df[

(forage_hour_df["social"] == social_val)

& (forage_hour_df["light"] == light_val)

& (forage_hour_df["n_pellets"] > 0)

]

# Plot normalized histogram

hist = sns.histplot(

data=subset,

x="n_pellets",

stat="probability",

alpha=0.5,

color=colors[light_val],

label=labels[i],

# kde=True, # Add kernel density estimate

common_norm=False, # Ensure each histogram is normalized separately

axes=ax,

binwidth=1,

)

# Set hatch pattern for bars

if hatches[social_val]:

for bar in hist.patches:

bar.set_hatch(hatches[social_val])

ax.set_title("Normalized Pellet Rate Distributions by Social and Light Conditions")

ax.set_xlabel("Number of pellets / hour")

ax.set_ylabel("Probability")

ax.legend(title="Conditions")

ax.set_xlim(3, 35)

plt.tight_layout()

plt.show()

# Plot pellet rate per hour bars for each combination

fig, ax = plt.subplots(figsize=(10, 6))

summary_data = []

for social_val in [True, False]:

for light_val in [True, False]:

subset = forage_hour_df[

(forage_hour_df["social"] == social_val)

& (forage_hour_df["light"] == light_val)

]

mean_n_pellets = subset["n_pellets"].mean()

sem_n_pellets = subset["n_pellets"].sem()

n_samples = len(subset)

summary_data.append(

{

"social": social_val,

"light": light_val,

"mean_n_pellets": mean_n_pellets,

"sem": sem_n_pellets,

"condition": f"{'Social' if social_val else 'Solo'}-{'Light' if light_val else 'Dark'}",

"n": n_samples,

}

)

summary_df = pd.DataFrame(summary_data)

# Set up positions for the bars

bar_width = 0.5

x_pos = np.array([0.25, 2.25, 0.75, 2.75]) # create two groups with a gap in the middle

# Plot bars

for i, row in enumerate(summary_data):

pos = x_pos[i]

social_val = row["social"]

light_val = row["light"]

bar = ax.bar(

pos,

row["mean_n_pellets"],

bar_width,

yerr=row["sem"],

color=colors[light_val],

edgecolor="black",

capsize=7,

label=f"{row['condition']}: μ={row['mean_n_pellets']:.2f}, SEM={row['sem']:.2f}",

)

# Apply hatching for social conditions

if hatches[social_val]:

bar[0].set_hatch(hatches[social_val])

# Add sample size as text above each bar

sample_size_txt = ax.text(

pos,

row["mean_n_pellets"] + row["sem"] + 0.1,

f"n={row['n']}",

ha="center",

va="bottom",

)

ax.set_title("Mean Number of Pellets per hour by Social and Light Conditions")

ax.set_ylabel("Number of pellets / hour")

ax.set_xticks(x_pos)

ax.set_xticklabels(["Social\nLight", "Social\nDark", "Solo\nLight", "Solo\nDark"])

ax.legend(title="Conditions", loc="upper left")

light_social = forage_hour_df.query("social and light")["n_pellets"]

light_solo = forage_hour_df.query("not social and light")["n_pellets"]

dark_social = forage_hour_df.query("social and not light")["n_pellets"]

dark_solo = forage_hour_df.query("not social and not light")["n_pellets"]

light_stat, light_p = stats.ttest_ind(

light_social, light_solo, alternative="two-sided", equal_var=False

)

dark_stat, dark_p = stats.ttest_ind(

dark_social, dark_solo, alternative="two-sided", equal_var=False

)

test_text = (

f"Two-sample t-tests:\n"

f"Light conditions: p = {light_p:.2e}\nDark conditions: p = {dark_p:.2e}"

)

props = dict(boxstyle="round,pad=0.3", facecolor="lightgray", alpha=0.8)

ax.text(

0.02,

0.68, # Position below the legend (since legend is upper left)

test_text,

transform=ax.transAxes,

verticalalignment="top",

bbox=props,

)

plt.tight_layout()

plt.show()

# Plot distance foraged rate per hour histograms for each combination

fig, ax = plt.subplots(figsize=(10, 6))

for i, (social_val, light_val) in enumerate(combos):

subset = forage_hour_df[

(forage_hour_df["social"] == social_val)

& (forage_hour_df["light"] == light_val)

& (forage_hour_df["n_pellets"] > 0)

]

# Plot normalized histogram

hist = sns.histplot(

data=subset,

x="dist_forage",

stat="probability",

alpha=0.5,

color=colors[light_val],

label=labels[i],

# kde=True, # Add kernel density estimate

common_norm=False, # Ensure each histogram is normalized separately

axes=ax,

binwidth=500,

)

# Set hatch pattern for bars

if hatches[social_val]:

for bar in hist.patches:

bar.set_hatch(hatches[social_val])

ax.set_title("Normalized Distance Foraged Distributions by Social and Light Conditions")

ax.set_xlabel("Distance foraged / hour")

ax.set_ylabel("Probability")

ax.legend(title="Conditions")

ax.set_xlim(0, 15000)

plt.tight_layout()

plt.show()

# Plot distance foraged rate per hour bars

fig, ax = plt.subplots(figsize=(10, 6))

summary_data = []

for social_val in [True, False]:

for light_val in [True, False]:

subset = forage_hour_df[

(forage_hour_df["social"] == social_val)

& (forage_hour_df["light"] == light_val)

]

mean_dist_forage = subset["dist_forage"].mean()

sem_dist_forage = subset["dist_forage"].sem()

n_samples = len(subset)

summary_data.append(

{

"social": social_val,

"light": light_val,

"mean_dist_forage": mean_dist_forage,

"sem": sem_dist_forage,

"condition": f"{'Social' if social_val else 'Solo'}-{'Light' if light_val else 'Dark'}",

"n": n_samples,

}

)

summary_df = pd.DataFrame(summary_data)

# Set up positions for the bars

bar_width = 0.5

x_pos = np.array([0.25, 2.25, 0.75, 2.75]) # create two groups with a gap in the middle

# Plot bars

for i, row in enumerate(summary_data):

pos = x_pos[i]

social_val = row["social"]

light_val = row["light"]

bar = ax.bar(

pos,

row["mean_dist_forage"],

bar_width,

yerr=row["sem"],

color=colors[light_val],

edgecolor="black",

capsize=7,

label=f"{row['condition']}: μ={row['mean_dist_forage']:.2f}, SEM={row['sem']:.2f}",

)

# Apply hatching for social conditions

if hatches[social_val]:

bar[0].set_hatch(hatches[social_val])

# Add sample size as text above each bar

sample_size_txt = ax.text(

pos,

row["mean_dist_forage"] + row["sem"] + 10,

f"n={row['n']}",

ha="center",

va="bottom",

)

ax.set_title("Mean Distance Foraged per hour by Social and Light Conditions")

ax.set_ylabel("Distance foraged / hour (cm)")

ax.set_xticks(x_pos)

ax.set_xticklabels(["Social\nLight", "Social\nDark", "Solo\nLight", "Solo\nDark"])

ax.legend(title="Conditions", loc="upper left")

# Wilcoxon rank sum tests

light_social = forage_hour_df.query("social and light")["dist_forage"]

light_solo = forage_hour_df.query("not social and light")["dist_forage"]

dark_social = forage_hour_df.query("social and not light")["dist_forage"]

dark_solo = forage_hour_df.query("not social and not light")["dist_forage"]

light_stat, light_p = stats.ttest_ind(

light_social, light_solo, alternative="two-sided", equal_var=False

)

dark_stat, dark_p = stats.ttest_ind(

dark_social, dark_solo, alternative="two-sided", equal_var=False

)

test_text = (

f"Two-sample t-tests:\n"

f"Light conditions: p = {light_p:.2e}\nDark conditions: p = {dark_p:.2e}"

)

props = dict(boxstyle="round,pad=0.3", facecolor="lightgray", alpha=0.8)

ax.text(

0.02,

0.68, # Position below the legend (since legend is upper left)

test_text,

transform=ax.transAxes,

verticalalignment="top",

bbox=props,

)

plt.tight_layout()

plt.show()

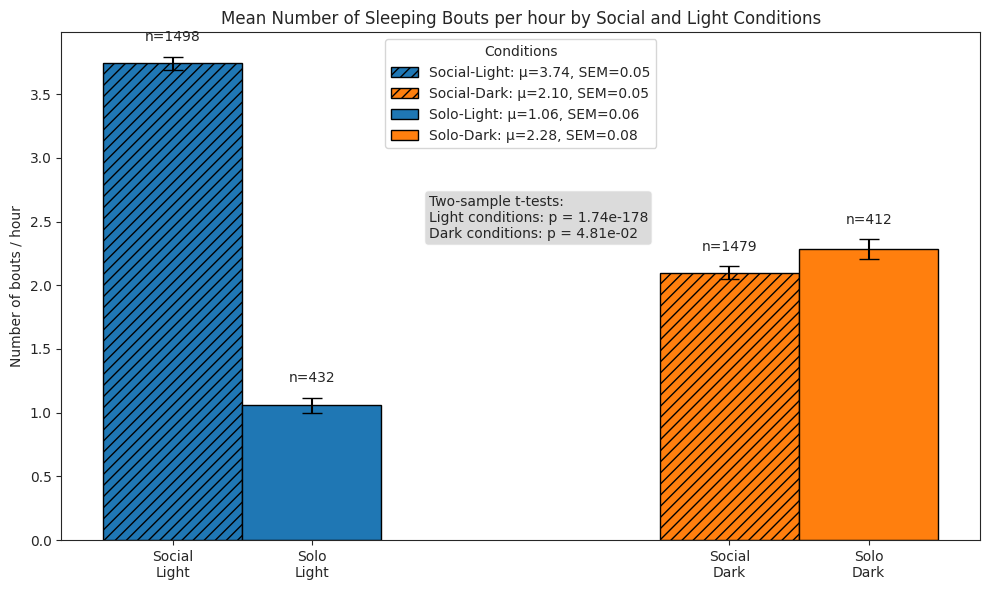

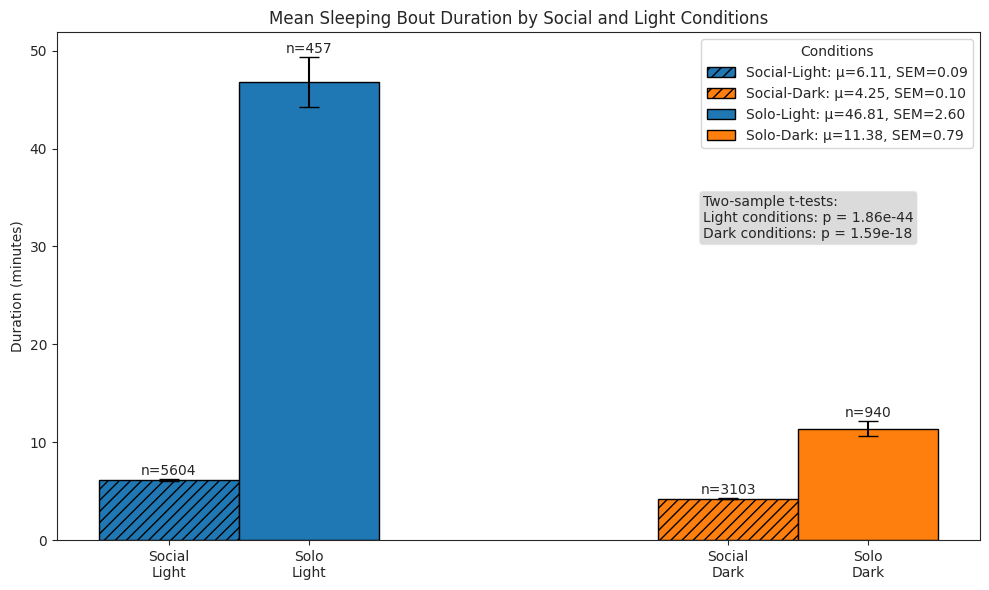

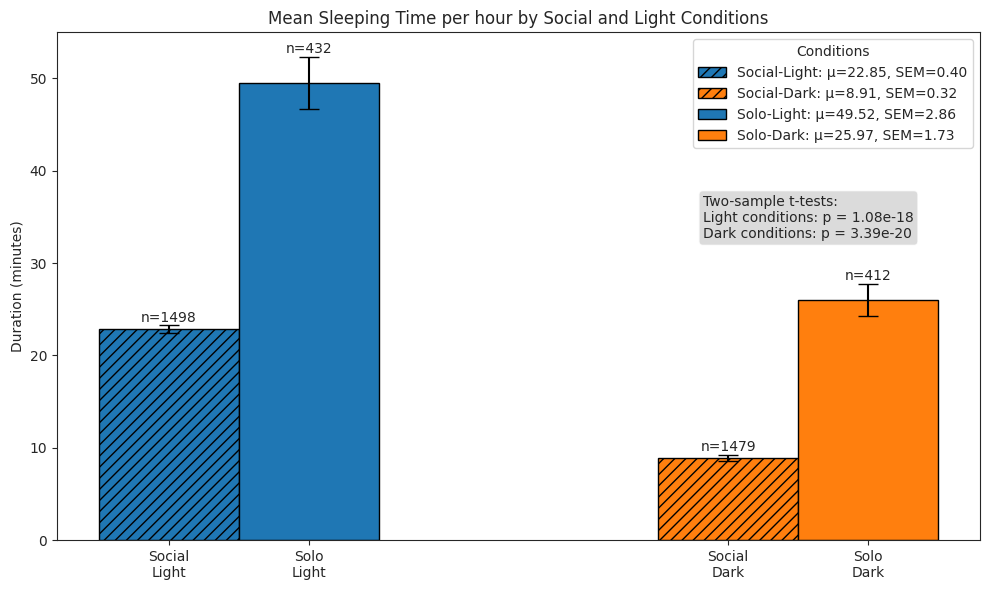

Sleeping#

We compare subjects’ sleeping behaviour in solo and social conditions across light and dark cycles by quantifying the following:

number of sleeping bouts per hour

duration of bouts

total time spent sleeping per hour

sleep_dur_df = pd.DataFrame(

{

"subject": pd.Series(dtype="string"),

"start": pd.Series(dtype="datetime64[ns]"),

"end": pd.Series(dtype="datetime64[ns]"),

"duration": pd.Series(dtype="float"), # in minutes

"period": pd.Series(dtype="string"),

"light": pd.Series(dtype="bool"),

}

)

sleep_hour_df = pd.DataFrame(

{

"subject": pd.Series(dtype="string"),

"hour": pd.Series(dtype="datetime64[ns]"),

"n_bouts": pd.Series(dtype="int"),

"duration": pd.Series(dtype="float"), # in minutes

"period": pd.Series(dtype="string"),

"light": pd.Series(dtype="bool"),

}

)

exp_pbar = tqdm(experiments, desc="Experiments", position=0, leave=True)

for exp in exp_pbar:

period_pbar = tqdm(periods, desc="Periods", position=1, leave=False)

for period in period_pbar:

sleep_bouts_df = load_data_from_parquet(

experiment_name=exp["name"],

period=period,

data_type="sleep",

data_dir=data_dir,

set_time_index=True,

)

# Get sleep bout durations

hour = sleep_bouts_df["start"].dt.hour

sleep_bouts_df["light"] = ~((hour > light_off) & (hour < light_on))

sleep_dur_df = pd.concat([sleep_dur_df, sleep_bouts_df], ignore_index=True)

# Get n sleep bouts and total duration per hour

for subject in sleep_bouts_df["subject"].unique():

sleep_df_subj = sleep_bouts_df[sleep_bouts_df["subject"] == subject]

sleep_df_subj["hour"] = sleep_df_subj["start"].dt.floor("h")

hour_stats = (

sleep_df_subj.groupby("hour")

.agg({"duration": ["count", "sum"]})

.reset_index()

)

hour_stats.columns = ["hour", "n_bouts", "duration"]

min_hour, max_hour = (

sleep_df_subj["hour"].min(),

sleep_df_subj["hour"].max(),

)

complete_hours = pd.DataFrame(

{"hour": pd.date_range(start=min_hour, end=max_hour, freq="h")}

)

sleep_df_subj_hour = pd.merge(

complete_hours, hour_stats, on="hour", how="left"

).fillna(0)

sleep_df_subj_hour["n_bouts"] = sleep_df_subj_hour["n_bouts"].astype(int)

sleep_df_subj_hour["period"] = period

sleep_df_subj_hour["subject"] = subject

hour = sleep_df_subj_hour["hour"].dt.hour

sleep_df_subj_hour["light"] = ~((hour > light_off) & (hour < light_on))

sleep_hour_df = pd.concat(

[sleep_hour_df, sleep_df_subj_hour], ignore_index=True

)

sleep_dur_df["duration"] = (

pd.to_timedelta(sleep_dur_df["duration"]).dt.total_seconds() / 60

)

sleep_hour_df["duration"] = (

pd.to_timedelta(sleep_hour_df["duration"]).dt.total_seconds() / 60

)

sleep_hour_df.head()

| subject | hour | n_bouts | duration | period | light | |

|---|---|---|---|---|---|---|

| 0 | BAA-1104045 | 2024-02-09 18:00:00 | 1 | 2.0 | social | False |

| 1 | BAA-1104045 | 2024-02-09 19:00:00 | 3 | 16.0 | social | False |

| 2 | BAA-1104045 | 2024-02-09 20:00:00 | 4 | 18.0 | social | True |

| 3 | BAA-1104045 | 2024-02-09 21:00:00 | 3 | 41.0 | social | True |

| 4 | BAA-1104045 | 2024-02-09 22:00:00 | 3 | 63.0 | social | True |

sleep_dur_df.head()

| subject | start | end | duration | period | light | |

|---|---|---|---|---|---|---|

| 0 | BAA-1104045 | 2024-02-09 18:24:42.540 | 2024-02-09 18:26:42.540 | 2.0 | social | False |

| 1 | BAA-1104045 | 2024-02-09 19:18:42.540 | 2024-02-09 19:23:42.540 | 5.0 | social | False |

| 2 | BAA-1104045 | 2024-02-09 19:50:42.540 | 2024-02-09 19:53:42.540 | 3.0 | social | False |

| 3 | BAA-1104045 | 2024-02-09 19:59:42.540 | 2024-02-09 20:07:42.540 | 8.0 | social | False |

| 4 | BAA-1104045 | 2024-02-09 20:12:42.540 | 2024-02-09 20:15:42.540 | 3.0 | social | True |

# Plot bars of bouts per hour

fig, ax = plt.subplots(figsize=(10, 6))

summary_data = []

for social_val in ["social", "postsocial"]:

for light_val in [True, False]:

subset = sleep_hour_df[

(sleep_hour_df["period"] == social_val)

& (sleep_hour_df["light"] == light_val)

]

mean_n_bouts = subset["n_bouts"].mean()

sem_n_bouts = subset["n_bouts"].sem()

n_samples = len(subset)

summary_data.append(

{

"social": social_val,

"light": light_val,

"mean_n_bouts": mean_n_bouts,

"sem": sem_n_bouts,

"condition": (

f"{'Social' if social_val == 'social' else 'Solo'}-"

f"{'Light' if light_val else 'Dark'}"

),

"n": n_samples,

}

)

summary_df = pd.DataFrame(summary_data)

# Set up positions for the bars

bar_width = 0.5

x_pos = np.array([0.25, 2.25, 0.75, 2.75]) # create two groups with a gap in the middle

# Plot bars

for i, row in enumerate(summary_data):

pos = x_pos[i]

social_val = row["social"]

light_val = row["light"]

bar = ax.bar(

pos,

row["mean_n_bouts"],

bar_width,

yerr=row["sem"],

color=colors[light_val],

edgecolor="black",

capsize=7,

label=f"{row['condition']}: μ={row['mean_n_bouts']:.2f}, SEM={row['sem']:.2f}",

)

# Apply hatching for social conditions

if hatches[social_val == "social"]:

bar[0].set_hatch(hatches[social_val == "social"])

# Add sample size as text above each bar

sample_size_txt = ax.text(

pos,

row["mean_n_bouts"] + row["sem"] + 0.1,

f"n={row['n']}",

ha="center",

va="bottom",

)

ax.set_title("Mean Number of Sleeping Bouts per hour by Social and Light Conditions")

ax.set_ylabel("Number of bouts / hour")

ax.set_xticks(x_pos)

ax.set_xticklabels(["Social\nLight", "Social\nDark", "Solo\nLight", "Solo\nDark"])

ax.legend(title="Conditions", loc="upper center")

# Perform stats tests

light_social = sleep_hour_df.query("period == 'social' and light")["n_bouts"]

light_solo = sleep_hour_df.query("period == 'postsocial' and light")["n_bouts"]

dark_social = sleep_hour_df.query("period == 'social' and not light")["n_bouts"]

dark_solo = sleep_hour_df.query("period == 'postsocial' and not light")["n_bouts"]

light_stat, light_p = stats.ttest_ind(

light_social, light_solo, alternative="two-sided", equal_var=False

)

dark_stat, dark_p = stats.ttest_ind(

dark_social, dark_solo, alternative="two-sided", equal_var=False

)

test_text = (

f"Two-sample t-tests:\n"

f"Light conditions: p = {light_p:.2e}"

f"\nDark conditions: p = {dark_p:.2e}"

)

props = dict(boxstyle="round,pad=0.3", facecolor="lightgray", alpha=0.8)

ax.text(

0.40,

0.68, # Position below the legend

test_text,

transform=ax.transAxes,

verticalalignment="top",

bbox=props,

)

plt.tight_layout()

plt.show()

sleep_dur_df.groupby(["period", "light"])["duration"].describe()

| count | mean | std | min | 25% | 50% | 75% | max | ||

|---|---|---|---|---|---|---|---|---|---|

| period | light | ||||||||

| postsocial | False | 940.0 | 11.382979 | 24.224019 | 2.0 | 2.0 | 3.0 | 6.0 | 265.0 |

| True | 457.0 | 46.809628 | 55.611719 | 2.0 | 5.0 | 19.0 | 84.0 | 470.0 | |

| social | False | 3103.0 | 4.245891 | 5.517260 | 2.0 | 2.0 | 3.0 | 4.0 | 117.0 |

| True | 5604.0 | 6.108851 | 6.398141 | 2.0 | 2.0 | 4.0 | 7.0 | 82.0 |

# Plot bars of bout durations

fig, ax = plt.subplots(figsize=(10, 6))

summary_data = []

for social_val in ["social", "postsocial"]:

for light_val in [True, False]:

subset = sleep_dur_df[

(sleep_dur_df["period"] == social_val)

& (sleep_dur_df["light"] == light_val)

]

mean_duration = subset["duration"].mean()

sem_duration = subset["duration"].sem()

n_samples = len(subset)

summary_data.append(

{

"social": social_val,

"light": light_val,

"mean_duration": mean_duration,

"sem": sem_duration,

"condition": (

f"{'Social' if social_val == 'social' else 'Solo'}-"

f"{'Light' if light_val else 'Dark'}"

),

"n": n_samples,

}

)

summary_df = pd.DataFrame(summary_data)

# Set up positions for the bars

bar_width = 0.5

x_pos = np.array([0.25, 2.25, 0.75, 2.75]) # create two groups with a gap in the middle

# Plot bars

for i, row in enumerate(summary_data):

pos = x_pos[i]

social_val = row["social"]

light_val = row["light"]

bar = ax.bar(

pos,

row["mean_duration"],

bar_width,

yerr=row["sem"],

color=colors[light_val],

edgecolor="black",

capsize=7,

label=f"{row['condition']}: μ={row['mean_duration']:.2f}, SEM={row['sem']:.2f}",

)

# Apply hatching for social conditions

if hatches[social_val == "social"]:

bar[0].set_hatch(hatches[social_val == "social"])

# Add sample size as text above each bar

sample_size_txt = ax.text(

pos,

row["mean_duration"] + row["sem"] + 0.1,

f"n={row['n']}",

ha="center",

va="bottom",

)

ax.set_title("Mean Sleeping Bout Duration by Social and Light Conditions")

ax.set_ylabel("Duration (minutes)")

ax.set_xticks(x_pos)

ax.set_xticklabels(["Social\nLight", "Social\nDark", "Solo\nLight", "Solo\nDark"])

ax.legend(title="Conditions")

# Perform stats tests

light_social = sleep_dur_df.query("period == 'social' and light")["duration"]

light_solo = sleep_dur_df.query("period == 'postsocial' and light")["duration"]

dark_social = sleep_dur_df.query("period == 'social' and not light")["duration"]

dark_solo = sleep_dur_df.query("period == 'postsocial' and not light")["duration"]

light_stat, light_p = stats.ttest_ind(

light_social, light_solo, alternative="two-sided", equal_var=False

)

dark_stat, dark_p = stats.ttest_ind(

dark_social, dark_solo, alternative="two-sided", equal_var=False

)

test_text = (

f"Two-sample t-tests:\n"

f"Light conditions: p = {light_p:.2e}"

f"\nDark conditions: p = {dark_p:.2e}"

)

props = dict(boxstyle="round,pad=0.3", facecolor="lightgray", alpha=0.8)

ax.text(

0.70,

0.68, # Position below the legend

test_text,

transform=ax.transAxes,

verticalalignment="top",

bbox=props,

)

plt.tight_layout()

plt.show()

# Plot total time spent sleeping per hour

fig, ax = plt.subplots(figsize=(10, 6))

summary_data = []

for social_val in ["social", "postsocial"]:

for light_val in [True, False]:

subset = sleep_hour_df[

(sleep_hour_df["period"] == social_val)

& (sleep_hour_df["light"] == light_val)

]

mean_duration = subset["duration"].mean()

sem_duration = subset["duration"].sem()

n_samples = len(subset)

summary_data.append(

{

"social": social_val,

"light": light_val,

"mean_duration": mean_duration,

"sem": sem_duration,

"condition": (

f"{'Social' if social_val == 'social' else 'Solo'}-"

f"{'Light' if light_val else 'Dark'}"

),

"n": n_samples,

}

)

summary_df = pd.DataFrame(summary_data)

# Set up positions for the bars

bar_width = 0.5

x_pos = np.array([0.25, 2.25, 0.75, 2.75]) # create two groups with a gap in the middle

# Plot bars

for i, row in enumerate(summary_data):

pos = x_pos[i]

social_val = row["social"]

light_val = row["light"]

bar = ax.bar(

pos,

row["mean_duration"],

bar_width,

yerr=row["sem"],

color=colors[light_val],

edgecolor="black",

capsize=7,

label=f"{row['condition']}: μ={row['mean_duration']:.2f}, SEM={row['sem']:.2f}",

)

# Apply hatching for social conditions

if hatches[social_val == "social"]:

bar[0].set_hatch(hatches[social_val == "social"])

# Add sample size as text above each bar

sample_size_txt = ax.text(

pos,

row["mean_duration"] + row["sem"] + 0.1,

f"n={row['n']}",

ha="center",

va="bottom",

)

ax.set_title("Mean Sleeping Time per hour by Social and Light Conditions")

ax.set_ylabel("Duration (minutes)")

ax.set_xticks(x_pos)

ax.set_xticklabels(["Social\nLight", "Social\nDark", "Solo\nLight", "Solo\nDark"])

ax.legend(title="Conditions")

# Perform stats tests

light_social = sleep_hour_df.query("period == 'social' and light")["duration"]

light_solo = sleep_hour_df.query("period == 'postsocial' and light")["duration"]

dark_social = sleep_hour_df.query("period == 'social' and not light")["duration"]

dark_solo = sleep_hour_df.query("period == 'postsocial' and not light")["duration"]

light_stat, light_p = stats.ttest_ind(

light_social, light_solo, alternative="two-sided", equal_var=False

)

dark_stat, dark_p = stats.ttest_ind(

dark_social, dark_solo, alternative="two-sided", equal_var=False

)

test_text = (

f"Two-sample t-tests:\n"

f"Light conditions: p = {light_p:.2e}"

f"\nDark conditions: p = {dark_p:.2e}"

)

props = dict(boxstyle="round,pad=0.3", facecolor="lightgray", alpha=0.8)

ax.text(

0.70,

0.68, # Position below the legend

test_text,

transform=ax.transAxes,

verticalalignment="top",

bbox=props,

)

plt.tight_layout()

plt.show()

Drinking#

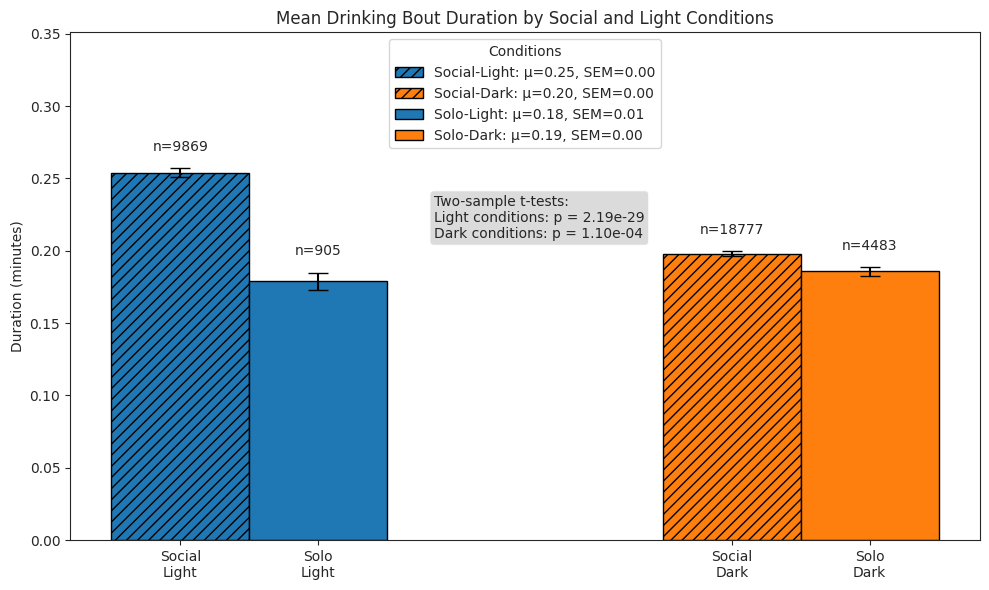

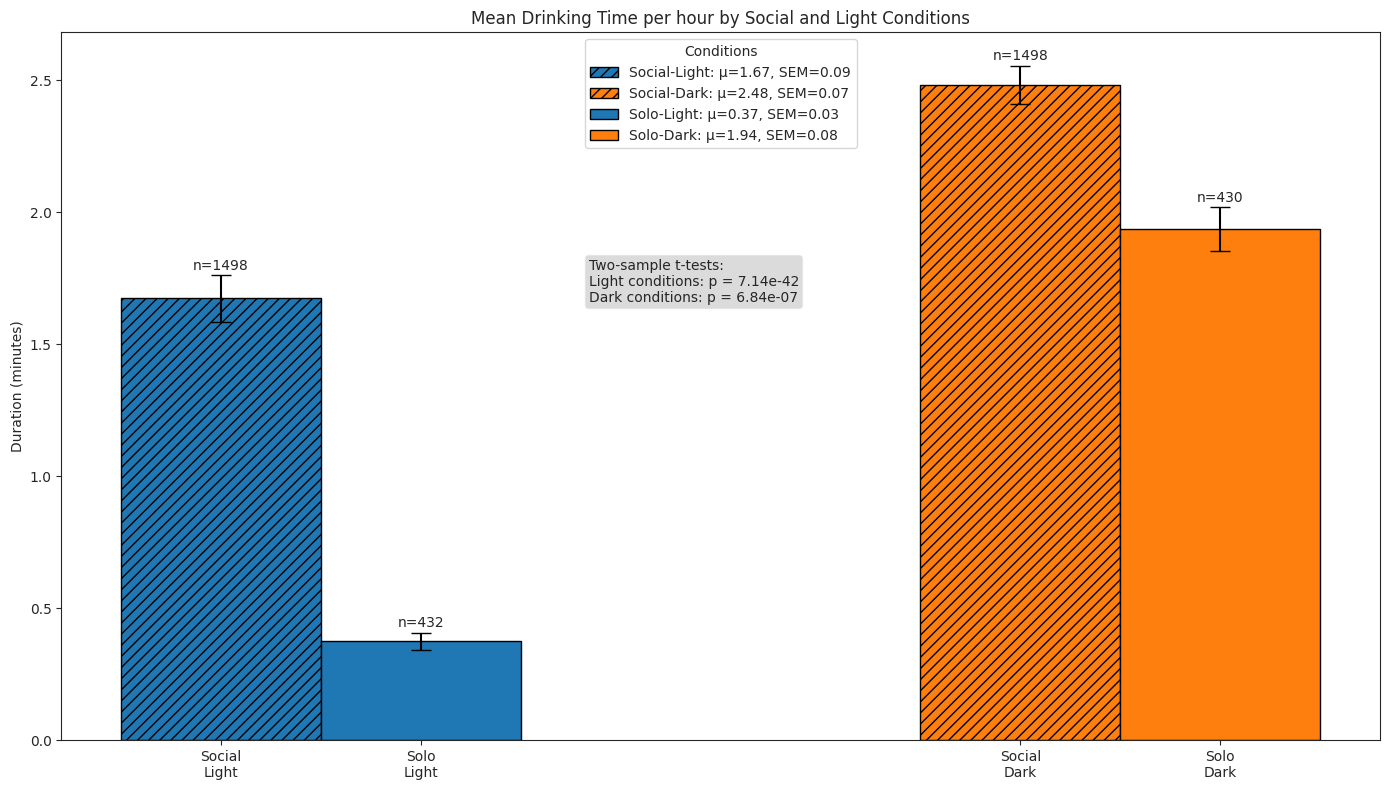

We compare subjects’ drinking behaviour in solo and social conditions across light and dark cycles by quantifying the following:

number of drinking bouts per hour

duration of bouts

total time spent drinking per hour

drink_dur_df = pd.DataFrame(

{

"subject": pd.Series(dtype="string"),

"start": pd.Series(dtype="datetime64[ns]"),

"end": pd.Series(dtype="datetime64[ns]"),

"duration": pd.Series(dtype="float"), # in minutes

"period": pd.Series(dtype="string"),

"light": pd.Series(dtype="bool"),

}

)

drink_hour_df = pd.DataFrame(

{

"subject": pd.Series(dtype="string"),

"hour": pd.Series(dtype="datetime64[ns]"),

"n_bouts": pd.Series(dtype="int"),

"duration": pd.Series(dtype="float"), # in minutes

"period": pd.Series(dtype="string"),

"light": pd.Series(dtype="bool"),

}

)

exp_pbar = tqdm(experiments, desc="Experiments", position=0, leave=True)

for exp in exp_pbar:

if exp["name"] == "social0.3-aeon4":

continue # Skip this experiment as the data is not available

period_pbar = tqdm(periods, desc="Periods", position=1, leave=False)

for period in period_pbar:

sleep_bouts_df = load_data_from_parquet(

experiment_name=exp["name"],

period=period,

data_type="drink",

data_dir=data_dir,

set_time_index=True,

)

# Get drink bout durations

hour = sleep_bouts_df["start"].dt.hour

sleep_bouts_df["light"] = ~((hour > light_off) & (hour < light_on))

drink_dur_df = pd.concat([drink_dur_df, sleep_bouts_df], ignore_index=True)

# Get n drink bouts and total duration per hour

for subject in sleep_bouts_df["subject"].unique():

sleep_df_subj = sleep_bouts_df[sleep_bouts_df["subject"] == subject]

sleep_df_subj["hour"] = sleep_df_subj["start"].dt.floor("h")

hour_stats = (

sleep_df_subj.groupby("hour")

.agg({"duration": ["count", "sum"]})

.reset_index()

)

hour_stats.columns = ["hour", "n_bouts", "duration"]

min_hour, max_hour = (

sleep_df_subj["hour"].min(),

sleep_df_subj["hour"].max(),

)

complete_hours = pd.DataFrame(

{"hour": pd.date_range(start=min_hour, end=max_hour, freq="h")}

)

sleep_df_subj_hour = pd.merge(

complete_hours, hour_stats, on="hour", how="left"

).fillna(0)

sleep_df_subj_hour["n_bouts"] = sleep_df_subj_hour["n_bouts"].astype(int)

sleep_df_subj_hour["period"] = period

sleep_df_subj_hour["subject"] = subject

hour = sleep_df_subj_hour["hour"].dt.hour

sleep_df_subj_hour["light"] = ~((hour > light_off) & (hour < light_on))

drink_hour_df = pd.concat(

[drink_hour_df, sleep_df_subj_hour], ignore_index=True

)

drink_dur_df["duration"] = (

pd.to_timedelta(drink_dur_df["duration"]).dt.total_seconds() / 60

)

drink_hour_df["duration"] = (

pd.to_timedelta(drink_hour_df["duration"]).dt.total_seconds() / 60

)

drink_hour_df.head()

| subject | hour | n_bouts | duration | period | light | |

|---|---|---|---|---|---|---|

| 0 | BAA-1104045 | 2024-02-09 16:00:00 | 6 | 0.376667 | social | False |

| 1 | BAA-1104045 | 2024-02-09 17:00:00 | 16 | 5.088333 | social | False |

| 2 | BAA-1104045 | 2024-02-09 18:00:00 | 18 | 3.176667 | social | False |

| 3 | BAA-1104045 | 2024-02-09 19:00:00 | 23 | 4.486667 | social | False |

| 4 | BAA-1104045 | 2024-02-09 20:00:00 | 11 | 1.083333 | social | True |

drink_dur_df.head()

| subject | start | end | duration | period | light | |

|---|---|---|---|---|---|---|

| 0 | BAA-1104045 | 2024-02-09 16:34:06.000 | 2024-02-09 16:34:11.200 | 0.086667 | social | False |

| 1 | BAA-1104045 | 2024-02-09 16:34:15.100 | 2024-02-09 16:34:18.600 | 0.058333 | social | False |

| 2 | BAA-1104045 | 2024-02-09 16:38:13.100 | 2024-02-09 16:38:16.600 | 0.058333 | social | False |

| 3 | BAA-1104045 | 2024-02-09 16:38:34.900 | 2024-02-09 16:38:38.500 | 0.060000 | social | False |

| 4 | BAA-1104045 | 2024-02-09 16:42:16.400 | 2024-02-09 16:42:19.500 | 0.051667 | social | False |

# Plot bars for number of drinking bouts per hour

fig, ax = plt.subplots(figsize=(10, 6))

summary_data = []

for social_val in ["social", "postsocial"]:

for light_val in [True, False]:

subset = drink_hour_df[

(drink_hour_df["period"] == social_val)

& (drink_hour_df["light"] == light_val)

]

mean_n_bouts = subset["n_bouts"].mean()

sem_n_bouts = subset["n_bouts"].sem()

n_samples = len(subset)

summary_data.append(

{

"social": social_val,

"light": light_val,

"mean_n_bouts": mean_n_bouts,

"sem": sem_n_bouts,

"condition": (

f"{'Social' if social_val == 'social' else 'Solo'}-"

f"{'Light' if light_val else 'Dark'}"

),

"n": n_samples,

}

)

summary_df = pd.DataFrame(summary_data)

# Set up positions for the bars

bar_width = 0.5

x_pos = np.array([0.25, 2.25, 0.75, 2.75]) # create two groups with a gap in the middle

# Plot bars

for i, row in enumerate(summary_data):

pos = x_pos[i]

social_val = row["social"]

light_val = row["light"]

bar = ax.bar(

pos,

row["mean_n_bouts"],

bar_width,

yerr=row["sem"],

color=colors[light_val],

edgecolor="black",

capsize=7,

label=f"{row['condition']}: μ={row['mean_n_bouts']:.2f}, SEM={row['sem']:.2f}",

)

# Apply hatching for social conditions

if hatches[social_val == "social"]:

bar[0].set_hatch(hatches[social_val == "social"])

# Add sample size as text above each bar

sample_size_txt = ax.text(

pos,

row["mean_n_bouts"] + row["sem"] + 0.1,

f"n={row['n']}",

ha="center",

va="bottom",

)

ax.set_title("Mean Number of Drinking Bouts per hour by Social and Light Conditions")

ax.set_ylabel("Number of bouts / hour")

ax.set_xticks(x_pos)

ax.set_xticklabels(["Social\nLight", "Social\nDark", "Solo\nLight", "Solo\nDark"])

# ax.set_ylim([0, 2.01])

ax.legend(title="Conditions")

# Perform stats tests

light_social = drink_hour_df.query("period == 'social' and light")["n_bouts"]

light_solo = drink_hour_df.query("period == 'postsocial' and light")["n_bouts"]

dark_social = drink_hour_df.query("period == 'social' and not light")["n_bouts"]

dark_solo = drink_hour_df.query("period == 'postsocial' and not light")["n_bouts"]

light_stat, light_p = stats.ttest_ind(

light_social, light_solo, alternative="two-sided", equal_var=False

)

dark_stat, dark_p = stats.ttest_ind(

dark_social, dark_solo, alternative="two-sided", equal_var=False

)

test_text = (

f"Two-sample t-tests:\n"

f"Light conditions: p = {light_p:.2e}"

f"\nDark conditions: p = {dark_p:.2e}"

)

props = dict(boxstyle="round,pad=0.3", facecolor="lightgray", alpha=0.8)

ax.text(

0.01,

0.7, # Position below the legend

test_text,

transform=ax.transAxes,

verticalalignment="top",

bbox=props,

)

plt.tight_layout()

plt.show()

# Plot bars of durations of bouts

fig, ax = plt.subplots(figsize=(10, 6))

summary_data = []

for social_val in ["social", "postsocial"]:

for light_val in [True, False]:

subset = drink_dur_df[

(drink_dur_df["period"] == social_val)

& (drink_dur_df["light"] == light_val)

]

mean_duration = subset["duration"].mean()

sem_duration = subset["duration"].sem()

n_samples = len(subset)

summary_data.append(

{

"social": social_val,

"light": light_val,

"mean_duration": mean_duration,

"sem": sem_duration,

"condition": (

f"{'Social' if social_val == 'social' else 'Solo'}-"

f"{'Light' if light_val else 'Dark'}"

),

"n": n_samples,

}

)

summary_df = pd.DataFrame(summary_data)

# Set up positions for the bars

bar_width = 0.5

x_pos = np.array([0.25, 2.25, 0.75, 2.75]) # create two groups with a gap in the middle

# Plot bars

for i, row in enumerate(summary_data):

pos = x_pos[i]

social_val = row["social"]

light_val = row["light"]

bar = ax.bar(

pos,

row["mean_duration"],

bar_width,

yerr=row["sem"],

color=colors[light_val],

edgecolor="black",

capsize=7,